The access pattern you choose for Vector Search significantly affects storage, query efficiency, and infrastructure alignment, all of which matter when optimizing your retrieval system for its intended application.

The static in-memory access pattern is ideal when working with a relatively small set of vectors, typically fewer than one million, that don't change frequently.

In this pattern, the entire vector set is loaded into your application's memory, enabling quick and efficient retrieval without external databases or storage. Because queries are served directly from memory, access times are very low, which makes this pattern well suited to low-data-volume scenarios that require real-time retrieval.

You can use libraries like NumPy and Facebook AI Similarity Search (FAISS) to implement static access in Python. These tools let you run similarity queries, such as cosine similarity, directly on your in-memory vectors.

For smaller vector sets (fewer than 100,000 vectors), NumPy may be efficient enough, especially for simple cosine similarity queries. However, if the vector corpus grows substantially or the query requirements become more complex, an "in-process vector database" like LanceDB or Chroma is a better choice.
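As a minimal sketch of the NumPy approach, assuming a randomly generated corpus of 10,000 vectors with 64 dimensions (illustrative values, not a recommendation), a brute-force cosine similarity search might look like this. The helper names `normalize` and `top_k_cosine` are hypothetical.

```python
import numpy as np


def normalize(vectors: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so a dot product equals cosine similarity."""
    norms = np.linalg.norm(vectors, axis=1, keepdims=True)
    return vectors / np.clip(norms, 1e-12, None)


def top_k_cosine(query: np.ndarray, corpus_unit: np.ndarray, k: int = 3):
    """Return (indices, scores) of the k rows of corpus_unit most similar to query."""
    q = query / max(np.linalg.norm(query), 1e-12)
    scores = corpus_unit @ q                    # cosine similarity against every row
    k = min(k, len(scores))
    top = np.argpartition(-scores, k - 1)[:k]   # unordered top k, avoids a full sort
    top = top[np.argsort(-scores[top])]         # order the k hits, best first
    return top, scores[top]


# Illustrative corpus: random vectors stand in for real embeddings.
rng = np.random.default_rng(0)
corpus = rng.normal(size=(10_000, 64)).astype(np.float32)
corpus_unit = normalize(corpus)                 # normalize once, at load time

indices, scores = top_k_cosine(corpus[42], corpus_unit, k=3)
```

Normalizing the corpus once up front means each query costs a single matrix-vector product. That is usually fine at this scale, but the cost grows linearly with corpus size.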

After a service restart or shutdown, static in-memory access requires you to reload the entire vector set into memory. This costs time and resources and affects the system's overall performance during the initialization phase.
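To get a feel for this reload cost, here is a rough sketch that persists a vector set to a `.npy` file and times how long it takes to load it back, as a service would on startup. The file name, corpus size, and helper names are assumptions made for illustration.

```python
import os
import tempfile
import time

import numpy as np


def save_vectors(path: str, vectors: np.ndarray) -> None:
    """Persist the vector set so it can be reloaded after a restart."""
    np.save(path, vectors)


def load_vectors(path: str):
    """Reload the full vector set into memory and report how long it took."""
    start = time.perf_counter()
    vectors = np.load(path)
    return vectors, time.perf_counter() - start


# Illustrative corpus; a real one would come from your embedding pipeline.
rng = np.random.default_rng(0)
vectors = rng.normal(size=(50_000, 128)).astype(np.float32)

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "vectors.npy")  # hypothetical file name
    save_vectors(path, vectors)
    reloaded, load_seconds = load_vectors(path)

print(f"Reloaded {reloaded.shape[0]} vectors in {load_seconds:.3f}s")
```

Measuring this on your own data and hardware tells you how long the service is unavailable, or serving without vectors, after each restart.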

In sum, static in-memory access is suitable for compact vector sets that remain static. It offers fast and efficient real-time access directly from memory.

But when the vector corpus grows substantially and is updated frequently, you’ll need a different access pattern: dynamic access.

Dynamic access is particularly useful for managing larger datasets, typically exceeding one million vectors, and for scenarios where vectors are subject to frequent updates – for example, sensor readings, user preferences, real-time analytics, and so on. Dynamic access relies on specialized databases and search tools designed for managing and querying high-dimensional vector data; these databases and tools efficiently handle access to evolving vectors and can retrieve them in real time, or near real time.
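The operations that distinguish dynamic access (inserting, updating, and deleting vectors while queries keep running) can be illustrated with a toy in-memory stand-in. `ToyVectorStore` below is hypothetical and is not the API of any real database. It also omits the indexing, persistence, and concurrency handling that real systems provide.

```python
import numpy as np


class ToyVectorStore:
    """Hypothetical stand-in for a vector database: upsert, delete, search."""

    def __init__(self, dim: int):
        self.dim = dim
        self._vectors = {}  # item id -> unit-length vector

    def upsert(self, item_id: str, vector) -> None:
        """Insert a new vector or overwrite the one stored under item_id."""
        v = np.asarray(vector, dtype=np.float32)
        if v.shape != (self.dim,):
            raise ValueError(f"expected a vector of dimension {self.dim}")
        norm = np.linalg.norm(v)
        if norm == 0:
            raise ValueError("cannot store a zero vector")
        self._vectors[item_id] = v / norm

    def delete(self, item_id: str) -> None:
        """Remove a vector; deleting an unknown id is a no-op."""
        self._vectors.pop(item_id, None)

    def search(self, query, k: int = 3):
        """Return up to k (id, cosine similarity) pairs, best match first."""
        if not self._vectors:
            return []
        ids = list(self._vectors)
        matrix = np.stack([self._vectors[i] for i in ids])
        q = np.asarray(query, dtype=np.float32)
        q = q / np.linalg.norm(q)
        scores = matrix @ q
        order = np.argsort(-scores)[:k]
        return [(ids[i], float(scores[i])) for i in order]


store = ToyVectorStore(dim=3)
store.upsert("sensor-a", [1.0, 0.0, 0.0])
store.upsert("sensor-b", [0.0, 1.0, 0.0])
before = store.search([1.0, 0.1, 0.0], k=1)        # best match: sensor-a

store.upsert("sensor-b", [1.0, 0.1, 0.0])          # a new reading replaces the old one
after_update = store.search([1.0, 0.1, 0.0], k=1)  # best match: sensor-b

store.delete("sensor-b")
after_delete = store.search([1.0, 0.1, 0.0], k=1)  # best match: sensor-a again
```

Every change is visible to the very next query, which is the property dynamic access is meant to provide. A production system has to deliver the same behavior at a much larger scale.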

Several types of technologies allow dynamic vector access, each with its own tradeoffs:

Vector-Native Vector Databases (e.g., Weaviate, Pinecone, Milvus, Vespa, Qdrant) are designed specifically for vector data and optimized for fast, efficient similarity searches on high-dimensional data. However, they may not be as versatile when it comes to traditional data operations.

Hybrid Databases (e.g., MongoDB, PostgreSQL with pgvector, Redis with VSS module) offer a combination of traditional and vector-based operations, providing greater flexibility in managing data. However, hybrid databases may not perform vector searches at the same level or with the same vector-specific features as dedicated vector databases.

Search Tools (e.g., Elasticsearch) are primarily built to handle text search but also provide some Vector Search capabilities. Search tools like Elasticsearch let you perform both text and Vector Search operations without needing a fully featured database.

Here's a simplified side-by-side comparison of each database type’s pros and cons:

| Type | Pros | Cons |
|---|---|---|
| Vector-Native Vector Databases | High performance for vector search tasks | May not be as versatile for traditional data operations |
| Hybrid Databases | Support for both traditional and vector operations | Slightly lower efficiency in handling vector tasks |
| Search Tools | Accommodate both text and vector search tasks | May not provide the highest efficiency in vector tasks |

Batch processing is an optimal approach when working with large vector sets, typically exceeding one million, that require collective, non-real-time processing. This pattern is particularly useful for Vector Management tasks such as model training or precomputing nearest neighbors, which are crucial steps in building Vector Search services.

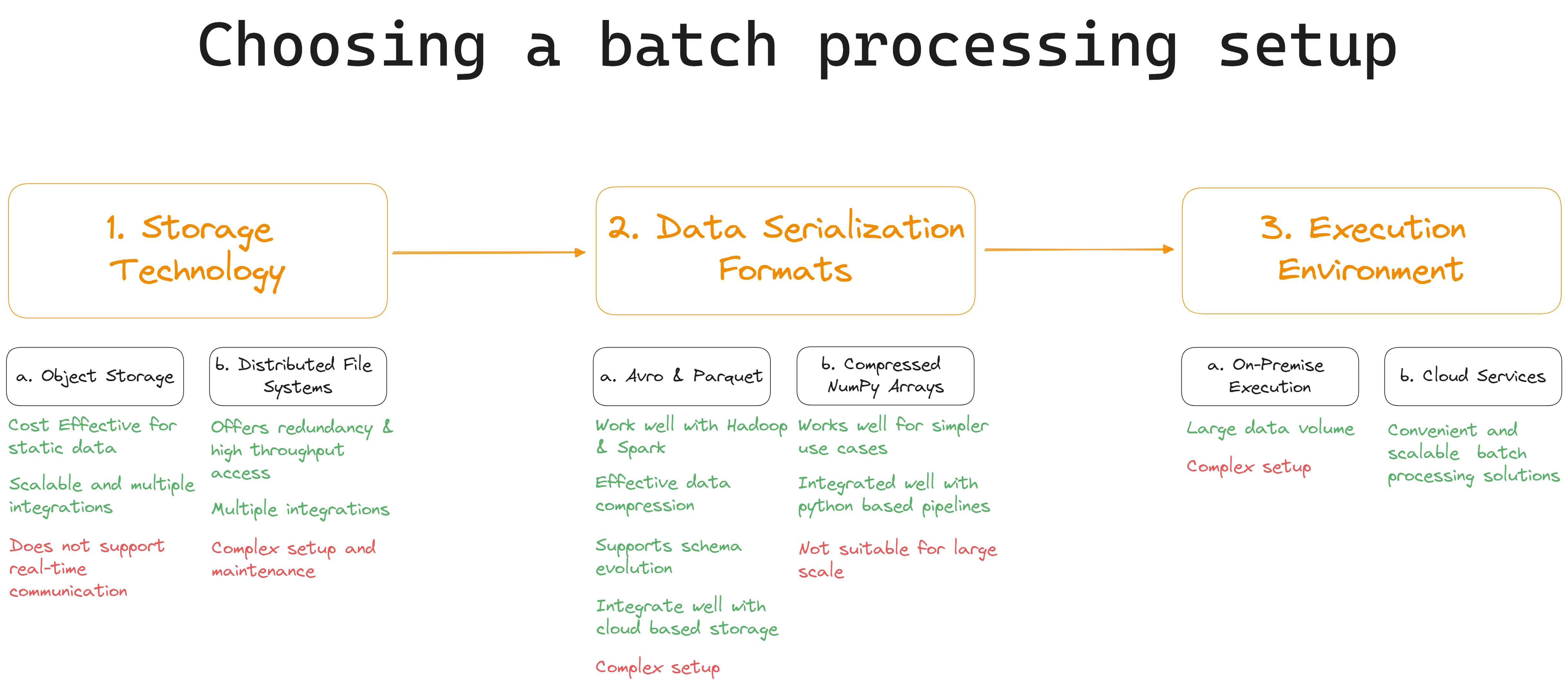
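As one illustration of a batch task, precomputing nearest neighbors can be sketched in NumPy by processing the corpus in fixed-size chunks, so the full similarity matrix never has to fit in memory at once. The function name, batch size, and corpus dimensions are assumptions. A production pipeline at the scale described above would more likely run on a distributed framework or use an approximate index.

```python
import numpy as np


def precompute_neighbors(vectors: np.ndarray, k: int = 5, batch_size: int = 1024) -> np.ndarray:
    """For every vector, find its k most cosine-similar other vectors, batch by batch."""
    norms = np.linalg.norm(vectors, axis=1, keepdims=True)
    unit = vectors / np.clip(norms, 1e-12, None)
    n = unit.shape[0]
    k = min(k, n - 1)
    neighbors = np.empty((n, k), dtype=np.int64)

    for start in range(0, n, batch_size):
        stop = min(start + batch_size, n)
        scores = unit[start:stop] @ unit.T            # shape: (batch, n)
        # Exclude each vector from its own neighbor list.
        scores[np.arange(stop - start), np.arange(start, stop)] = -np.inf
        top = np.argpartition(-scores, k - 1, axis=1)[:, :k]
        rows = np.arange(stop - start)[:, None]
        order = np.argsort(-scores[rows, top], axis=1)
        neighbors[start:stop] = top[rows, order]      # best match first

    return neighbors


# Illustrative corpus; real batch jobs would read vectors from storage.
rng = np.random.default_rng(0)
vectors = rng.normal(size=(2_000, 32))
neighbors = precompute_neighbors(vectors, k=5, batch_size=256)
```

The result is a lookup table of neighbor indices that a serving layer can read directly, instead of computing similarities at query time.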

To be sure your batch processing setup fits your application, you need to keep the following key considerations in mind:

1. Storage Technologies: You need scalable, reliable storage to house your vectors during the batch pipeline. Various technologies offer different levels of efficiency:

2. Data Serialization Formats: For storing vectors efficiently, you need compact data formats that conserve space and support fast read operations:

3. Execution Environment: You have two primary options for executing batch-processing tasks:

In short, which storage technology, data serialization format, and execution environment you choose for your batch processing use case depends on various considerations, including:
