The research discussed in this article has now been open-sourced and made available for you to try, including the embeddings, here

In our contemporary data-centric world, embeddings have become indispensable for converting complex and varied data into numerical representations that are both manageable and analytically powerful.

Across a spectrum of industries, from e-commerce to healthcare, these embeddings enable machines to interpret, analyze, and make predictions from large-scale datasets containing textual and/or visual information.

Traditionally, models have relied on unimodal data, typically either images or text, but not both. However, the advent of multimodal models, which can synergize various data forms, has proven to be a game-changer. Multimodal approaches surpass the limitations of unimodal methods, offering richer contextual insights and enhanced predictive capabilities, and paving the way for more sophisticated and accurate applications across diverse sectors.

Below, we carry out various text and image embedding experiments using COCO and Open Images V7 datasets, showcasing different unimodal and multimodal embedding models, and assessing their effectiveness using ranking metrics. By the end, you'll have an understanding of how to embed multimodal data. We'll also evaluate the performance of unimodal vs. multimodal embeddings, and how different multimodal models stack up against each other.

Our datasets must satisfy two essential criteria:

Publicly available datasets that meet these criteria are rare. Common Objects in Context (COCO) and Open Images V7 are notable exceptions. Both datasets are extensively utilized as benchmark datasets for object detection, segmentation, and image captioning tasks.

COCO comprises images from 80 object categories, each image accompanied by 5 unique, human-written captions that distinctively describe objects present in the image. Open Images V7 encompasses a significantly larger number of distinct object categories: approximately 20,245. In addition to captions, Open Images V7 introduces Localized Narratives (human audio descriptions) for each image segment, identified by mouse hovering. Each subpart of the Localized Narrative is accompanied by a timestamp. An illustrative example can be found here. In our experiments, we leverage the textual representation of these Localized Narratives as captions.

COCO and Open Images V7 fulfill our essential dataset criteria: we can identify the object set (e.g., keyboard, mouse, person, TV) present in any particular image, and, by excluding images whose object set appears only once, ensure that at least two images share each object set. We removed these outliers from the COCO dataset based on the label set frequency distribution. The resulting COCO and Open Images V7 datasets contain 103,429 and 149,847 samples, respectively.
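The filtering step can be sketched roughly as follows. This is a minimal illustration, not the code used in the experiments: the `(image_id, labels)` sample layout and the function name `filter_singleton_label_sets` are assumptions made for the example.

```python
from collections import Counter


def filter_singleton_label_sets(samples):
    """Keep only samples whose object set is shared by at least one other sample.

    `samples` is a list of (image_id, labels) pairs. This layout is a
    hypothetical one for illustration, not the datasets' native annotation format.
    """
    # An object set is order-independent, so a frozenset serves as the key.
    keyed = [(image_id, frozenset(labels)) for image_id, labels in samples]
    counts = Counter(label_set for _, label_set in keyed)
    return [
        (image_id, label_set)
        for image_id, label_set in keyed
        if counts[label_set] >= 2
    ]


samples = [
    ("img_1", ["keyboard", "mouse", "person", "tv"]),
    ("img_2", ["person", "tv", "keyboard", "mouse"]),  # same set, different order
    ("img_3", ["dog", "frisbee"]),                     # object set appears only once
]
kept = filter_singleton_label_sets(samples)
print([image_id for image_id, _ in kept])  # ['img_1', 'img_2']
```

Keeping only object sets that occur at least twice guarantees that every query image has at least one other image that counts as a correct retrieval.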

Here's an example image from the COCO dataset, and below it, the human-written captions corresponding to the image's object set.

Example image (above) from the COCO dataset, with corresponding human-written captions (below).

A young boy standing in front of a computer keyboard. A little boy wearing headphones and looking at a computer monitor. He is listening intently to the computer at school. A young boy stares up at the computer monitor. A young kid with head phones on using a computer.

In our experiments below, we vectorize/embed, respectively, 1) image captions, 2) images, 3) both images and their captions, 4) images with multimodal transformers, 5) both images and their captions with multimodal transformers. In cases where images and their captions are vectorized separately, the embeddings are concatenated.

After embedding the entire dataset and normalizing each vector to unit length, we assess the quality of the embedding vectors by retrieving them and calculating ranking metrics. More specifically, we iterate over the vector space and retrieve each vector's k (=10) nearest neighbors based on cosine similarity. Cosine similarity is the cosine of the angle between two vectors; for unit-length vectors, it equals their dot product.
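As a rough sketch of this retrieval step, the snippet below normalizes a few toy vectors and ranks neighbors by dot product. It uses exact brute-force search in plain Python for readability; the helper names are hypothetical, and excluding the query vector from its own neighbor list is an assumption on our part.

```python
import math


def normalize(vec):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def knn_cosine(vectors, query_idx, k=10):
    """Return the indices of the k nearest neighbors of vectors[query_idx].

    Assumes every vector is already unit length, so the dot product equals
    the cosine similarity. The query itself is excluded (an assumption).
    """
    query = vectors[query_idx]
    scored = [(dot(query, v), i) for i, v in enumerate(vectors) if i != query_idx]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [i for _, i in scored[:k]]


raw = [[1.0, 0.0], [2.0, 0.1], [0.0, 3.0], [1.0, 1.0]]
unit = [normalize(v) for v in raw]
print(knn_cosine(unit, query_idx=0, k=2))  # [1, 3]
```

At the scale of the datasets used here, a vector index would normally replace the brute-force loop; the ranking logic stays the same.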

For the retrieved vectors, we calculate ranking metrics using Torchmetrics. We focus primarily on Mean Reciprocal Rank (MRR) and Normalized Discounted Cumulative Gain (NDCG), both of which you can read more about here. But we also use other information retrieval metrics like Mean Average Precision (MAP), Precision@k, and Recall@k, which are explained in detail here. In all of these metrics, the better the ranking of relevant items/hits, the more effective the retrieval.
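To make the two primary metrics concrete, here is a minimal pure-Python sketch of MRR and NDCG over binary relevance lists. It is not the Torchmetrics implementation. It assumes a retrieved item counts as relevant when it shares the query's object set, and it computes the ideal ordering for NDCG from the retrieved list only; both are assumptions made for illustration.

```python
import math


def reciprocal_rank(relevance):
    """1 / rank of the first relevant item, or 0.0 if none is relevant."""
    for rank, rel in enumerate(relevance, start=1):
        if rel:
            return 1.0 / rank
    return 0.0


def mean_reciprocal_rank(all_relevance):
    """Average the reciprocal rank over all queries."""
    return sum(reciprocal_rank(r) for r in all_relevance) / len(all_relevance)


def dcg(relevance):
    """Discounted cumulative gain with a log2 position discount."""
    return sum(rel / math.log2(rank + 1) for rank, rel in enumerate(relevance, start=1))


def ndcg(relevance):
    """DCG divided by the DCG of the same items in ideal (sorted) order."""
    ideal = dcg(sorted(relevance, reverse=True))
    return dcg(relevance) / ideal if ideal > 0 else 0.0


# One list per query: 1 marks a retrieved neighbor judged relevant, in rank order.
queries = [
    [0, 1, 0],  # first hit at rank 2
    [1, 0, 0],  # first hit at rank 1
    [0, 0, 0],  # no hit
]
print(mean_reciprocal_rank(queries))  # 0.5
print(round(ndcg([0, 1, 0]), 4))      # 0.6309
```

Both metrics reward placing relevant items near the top of the list: MRR looks only at the first hit, while NDCG takes every hit into account, discounted by position.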

Now that we understand the basics, let's dive into each of our embedding experiments and their results. Afterwards, we'll put these results side by side to compare them.

In experiment 1, we vectorized image captions using the Sentence-Transformers library, selecting top-performing models suited to our use case from a Pretrained Models Leaderboard as well as the Sentence Transformers library. In addition to using different models, we tried different ways of processing our textual data in different types of runs:

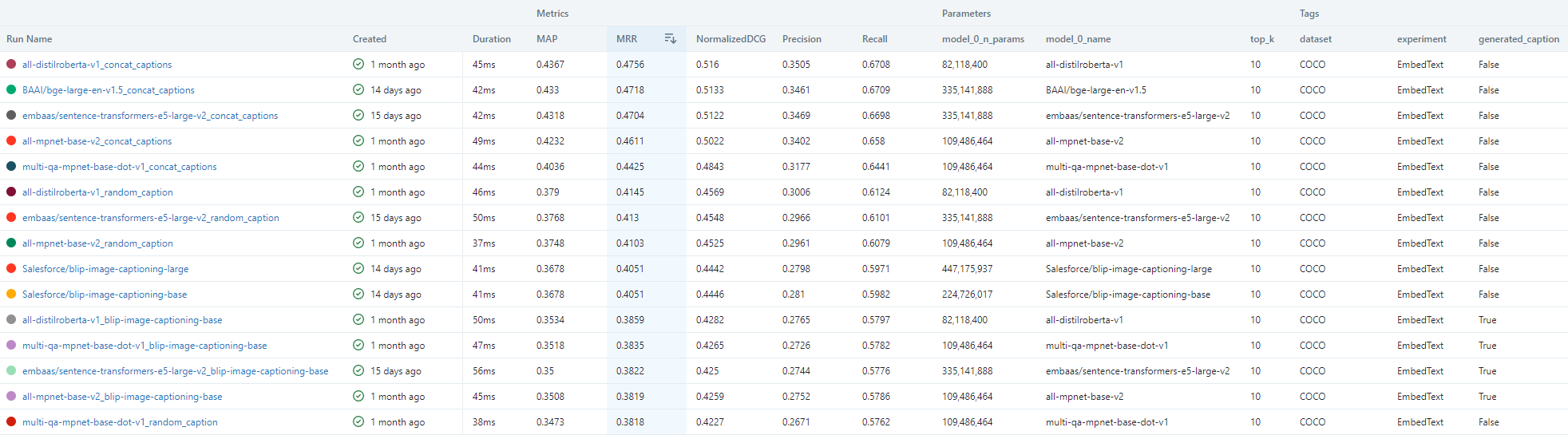

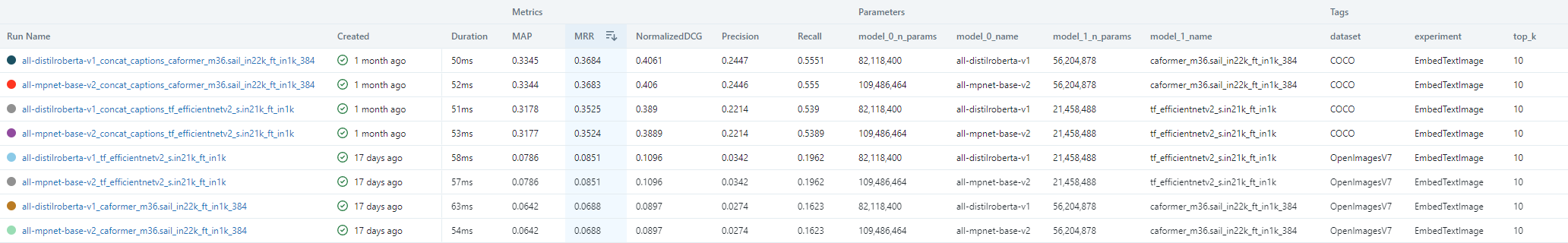

We collected the results of experiment 1 in the table below, which presents the Run Name, metric values, number of model parameters, model name, and top k retrieved vectors for evaluation, as well as a flag to indicate whether the caption is generated or not.

Concatenating the 5 human-written captions yielded the best results. The "all-distilroberta-v1", "bge-large-en-v1.5", and "e5-large-v2" models performed comparably well on MRR and NDCG metrics. Using a randomly chosen caption produced the second-best outcomes on average. Generating new captions with BLIP produced the lowest MRR scores.

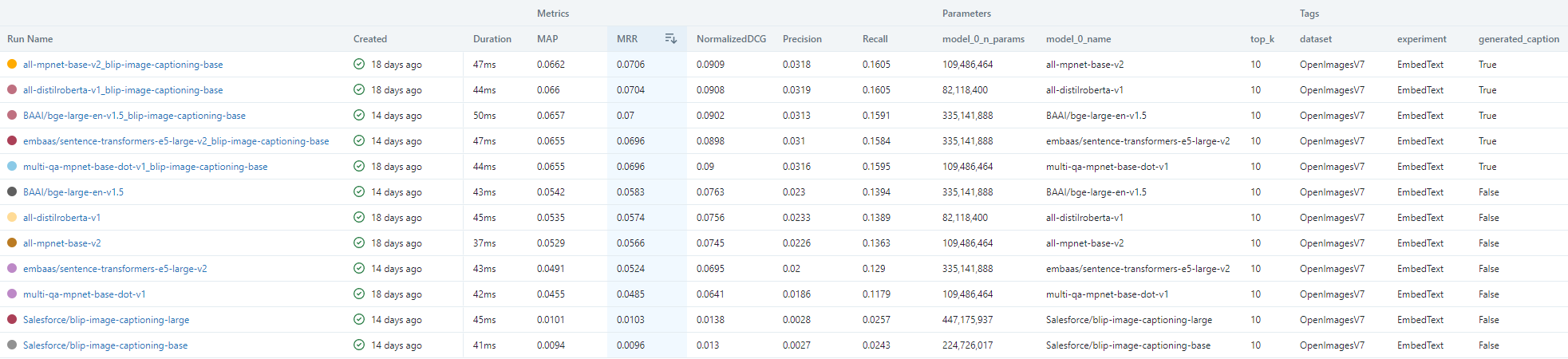

But do these outcome patterns hold true for the more diverse Open Images V7 dataset, which features a broader range of objects and more descriptive Localized Narratives for each image? Let's take a look in the table below.

BLIP-generated captions were more effective than human Localized Narratives on the Open Images V7 dataset. The "all-distilroberta-v1", "bge-large-en-v1.5", and "e5-large-v2" models maintained their relative performance order, but the "all-mpnet-v2" model did better, with the top MRR score: 0.0706. Overall, all the models performed comparably on generated captions, with slight variations attributable to Approximate Nearest Neighbor Search using FAISS.

When we used LLaVA 1.5 to generate detailed descriptions for each image, the model tended to hallucinate nonexistent objects at least 50% of the time. Performance improved when we prompted for detailed descriptions of only those elements LLaVA 1.5 was confident about, but the model's output of one to two short sentences was no better than BLIP's output. We also looked at GPT-4, which performed well for all tested images. But GPT-4's current API limit means that it would take an estimated 2 weeks to re-caption an entire dataset, making it impractical.

In sum, the Sentence Transformers models performed consistently across diverse datasets in our first experiment. In addition, generating captions with BLIP seems to be a viable option, especially when the captions provide a detailed description of each image. However, in use cases that require descriptions focused on the overall concept, where such fine granularity isn't necessary, BLIP-generated captions may unnecessarily reduce the system's retrieval capabilities.

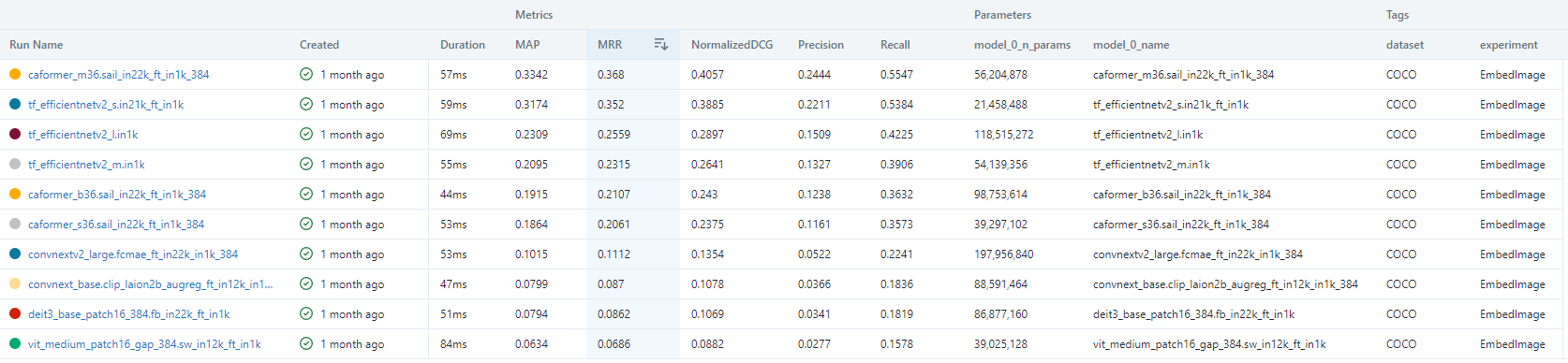

In our second experiment, we used PyTorch Image Models (timm) to embed each image, and evaluated image embedding exclusively, looking at how an increase in the number of model parameters impacts the quality of the embeddings and subsequent performance. We selected our models from the ImageNet leaderboard in the timm repository. We compared different sizes within the EfficientNetV2 family, and included a Vision Transformer (ViT) and its variants for contrast. First, let's look at notable COCO dataset results.

On the COCO dataset, the caformer_m36 model, which has approximately 56 million parameters, performed best, with an MRR score of 0.368. The next best models were from the EfficientNetV2 family. Its smallest model, with around 21.5 million parameters, had the second highest MRR score, at 0.352. Now, let's see how the models performed on the Open Images V7 dataset.

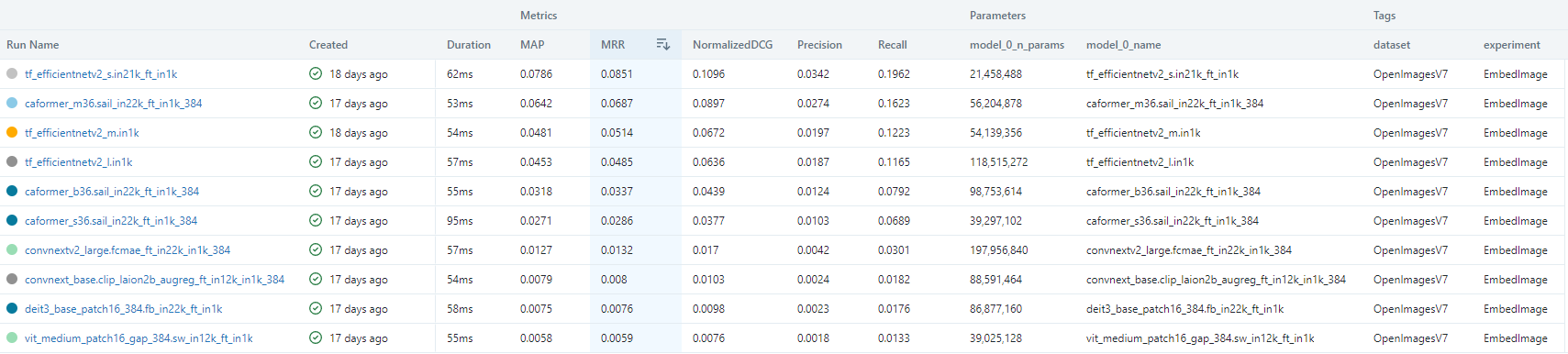

The smallest EfficientNetV2 model was the best performer on the Open Images V7 dataset; caformer_m36 came second, followed by the EfficientNetV2 models of sizes m and l. The models' performance relative to each other remained roughly consistent across datasets. Also, though we expected superior performance from the Data-efficient Image Transformer models (DeiTs) because of their inductive biases (acquired through knowledge distillation), DeiTs performed the worst of all the models we tested on both datasets.

Our third experiment concatenated vectors from our first two experiments into a combined vector space. We iterated through this space to retrieve the k nearest neighbors for each concatenated vector, with the following results.

On the COCO dataset, concatenating vectors from two unaligned vector spaces into one space deteriorated the performance of the Sentence Transformers models to the level of the Computer Vision models. As a result, we next investigated (in experiments 4 and 5) whether using jointly trained text and image encoders, and then concatenating their vectors, might lead to better performance than concatenating vectors created by separately trained image and text encoders.
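A small sketch of the concatenation step may help. It assumes each modality's vector is normalized to unit length before concatenation and that the result is normalized again; the exact preprocessing used in the experiments may differ, and the helper names are hypothetical. Under that assumption, the cosine similarity of two concatenated vectors is the average of their text similarity and their image similarity.

```python
import math


def normalize(vec):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def concat_embedding(text_vec, image_vec):
    """Concatenate unit-length text and image vectors, then renormalize.

    Normalizing each part first is an assumption made for this sketch.
    """
    return normalize(normalize(text_vec) + normalize(image_vec))


# Toy vectors: the two captions are orthogonal (cosine 0.0),
# while the two images are identical (cosine 1.0).
item_a = concat_embedding([1.0, 0.0], [3.0, 0.0])
item_b = concat_embedding([0.0, 2.0], [1.0, 0.0])

print(round(dot(item_a, item_b), 6))  # 0.5, the average of 0.0 and 1.0
```

Because each modality contributes equally to the combined similarity, a weaker or unaligned modality can pull the combined ranking toward its own level. This is one plausible reading of the result above, not something the experiments establish.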

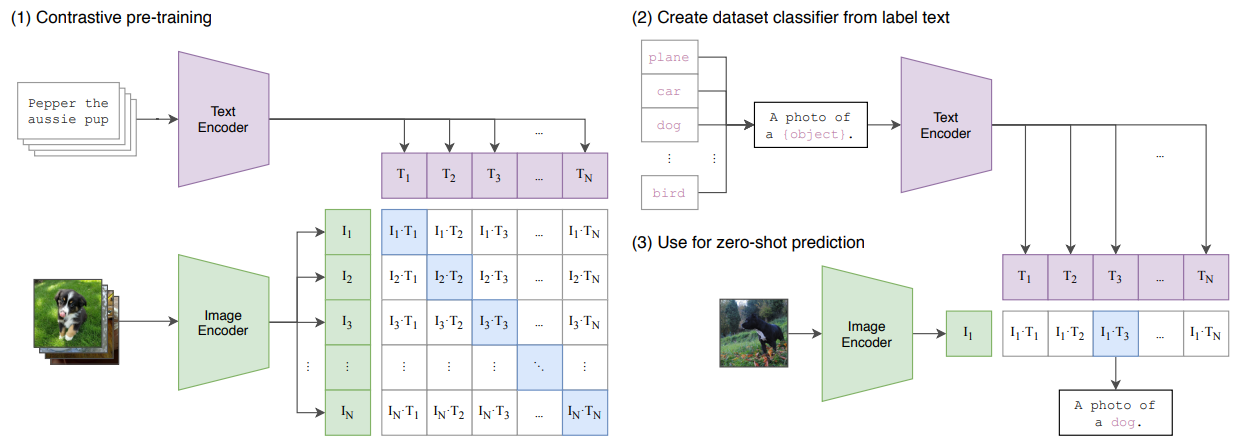

In experiment 4, we look at the performance of models based on Contrastive Language-Image Pretraining (CLIP). CLIP models employ separate but jointly trained Text and Image encoders to create a single multimodal embedding space. Regardless of whether the embeddings in this space represent text or image, if they are semantically similar, they are positioned closer together.

CLIP's high-level architecture (above), from Learning Transferable Visual Models From Natural Language Supervision.

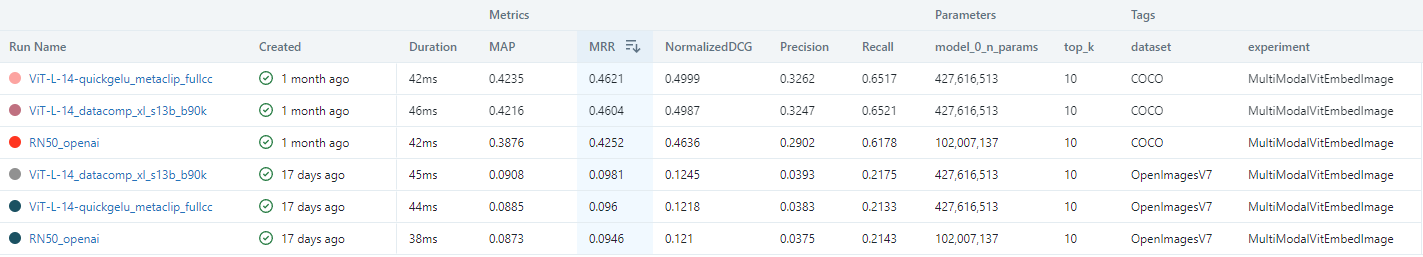

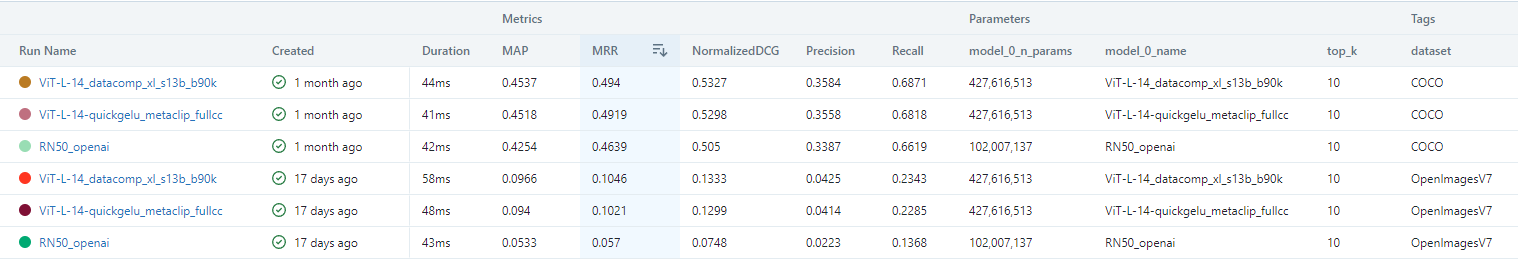

The structure of CLIP encoders (image above) makes them versatile and adaptable to various model architectures for embedding text or image data. In our experiment, we used pretrained models from the OpenClip leaderboard, and applied the Image Encoder to embed the images. Then we evaluated the outcomes.

The performance of the tested models was consistent across both datasets. ViT-based models outperformed the ResNet50-based model on COCO. On the Open Images V7 dataset, the difference between ViT and ResNet50 (RN50_openai) was less significant, despite ViT models having more than 4 times as many parameters. We also present results (below) from BLIP, which encodes images using a ViT model.

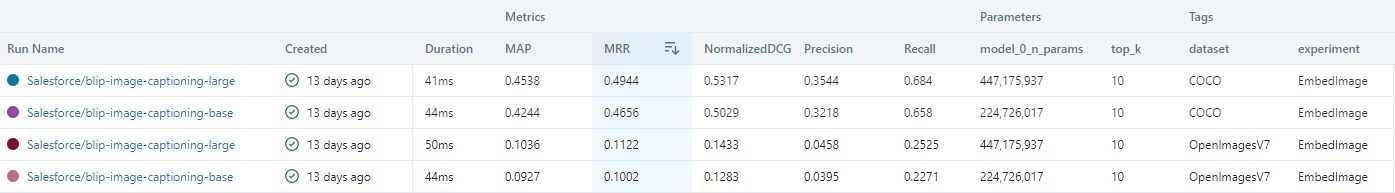

BLIP achieved the best MRR scores on both datasets, surpassing the OpenCLIP models, aligning with findings of the BLIP paper. The larger of the two BLIP models, with 447 million parameters (the base model has 224.7 million), reached notable MRR scores of 0.494 on COCO and 0.112 on Open Images V7.

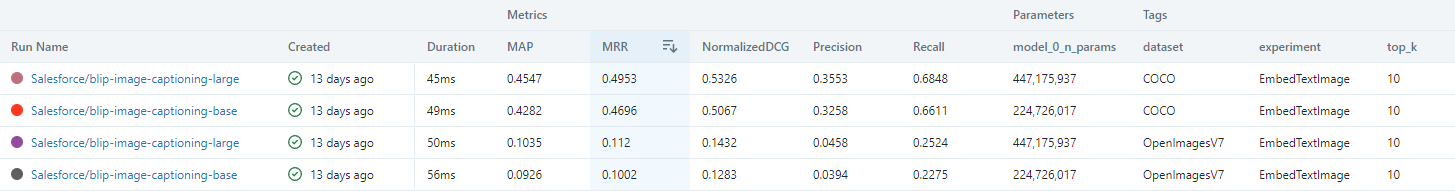

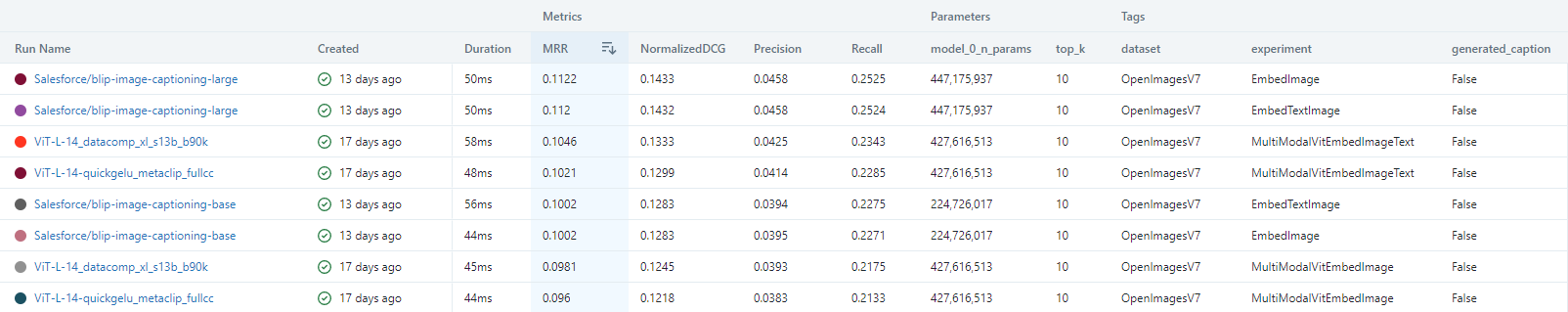

In our final experiment, we used Text and Image encoders from both CLIP and BLIP models to encode captions and images separately, then concatenated the resulting embeddings. A key difference from our third experiment (embedding both images and their captions) is that, here, the encoders have either undergone joint pre-training (in the case of CLIP) or had their embeddings aligned with additional layers (in the case of BLIP).

In experiment 5, the rank order of the two ViT-based OpenCLIP models on the COCO dataset was inverted (from what it was in experiment 4), but they performed comparably well on both the COCO and Open Images V7 datasets. In the BLIP experiments (below), the BLIP models once again proved to be more effective: the largest model had an MRR score of 0.4953 on the COCO dataset, marginally (0.26%) better than the best OpenCLIP model, and 0.112 on Open Images V7, 7.07% better than the best OpenCLIP model.

Here, as we anticipated, concatenating embeddings from two jointly trained or aligned encoders boosted retrieval performance, over and above the results achieved by concatenating vectors created by separately trained image and text encoders (in experiment 3). This boost was more pronounced for the OpenCLIP models.

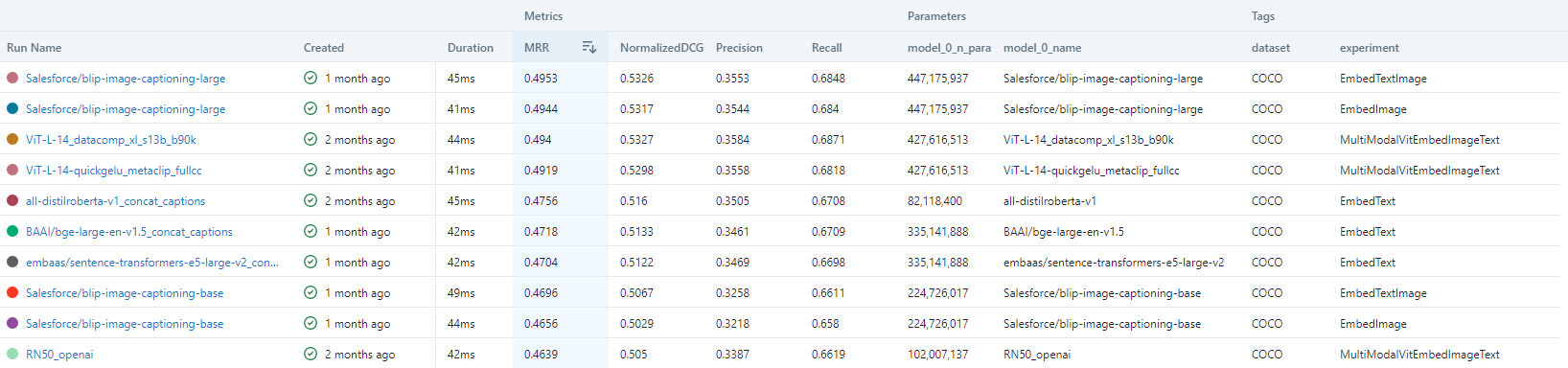

Now, let's put all our results side by side for comparison.

On both the COCO and Open Images V7 datasets, the BLIP and OpenCLIP models proved to be the most effective feature extractors. On the COCO dataset, the BLIP model performed about the same using only image embeddings as it did when using both image and caption embeddings. Indeed, in general, using both image and caption embeddings makes the highest performing models perform only marginally better. The top Sentence Transformers models' MRR scores trailed by about 2%, but their inference speed was significantly faster. However, on the Open Images V7 dataset, Sentence Transformers models' MRR scores lagged behind the other models by around 37%.

We should also take into account the inference time and GPU demands for each of our experiments. These metrics were gathered using an RTX 3080 16 GB GPU, capable of 29.77 TFLOPS on FP32. When processing the merged COCO training and validation dataset, containing 103,429 data samples post-preprocessing, we noted the following inference times and resource allocations. It's important to note that GPU utilization was always maximized through parallelized data loading to ensure efficiency.

If high-quality image captions are already in hand, embedding with Sentence Transformers proves to be highly efficient, and balances speed and effectiveness. On the other hand, if only images are available and your application or project also requires captions to be generated, the time cost of different methods should be considered carefully.

The outcomes of these experiments open up several intriguing questions for further investigation. Here are a few key areas to explore:

Our experiments demonstrate that Transformer models are highly effective feature extractors for both text and image data. State-of-the-art image-captioning models have proven to be excellent at annotating images and ensuring consistency across similar concepts. Vision Transformers emerge as robust feature encoders for image data. Moreover, using jointly trained text and image encoders appears to offer significant advantages in data embedding tasks involving multiple modalities, compared to using separately trained encoders, whether alone or in combination. Typically, BLIP and OpenCLIP models serve as reliable options for embedding data that involves both image and text modalities.
