In our first article, we introduced RAG evaluation and its challenges, proposed an evaluation framework, and provided an overview of the various tools and approaches you can use to evaluate your RAG application. In this second of three articles, we build on the concepts introduced in the first article to walk you through our implementation of a RAG evaluation framework, RAGAS, which is based on Es et al.'s introductory paper. RAGAS aims to provide useful and actionable metrics while relying on as little annotated data as possible, making it a cheaper and faster way of evaluating your RAG pipeline than using LLMs.

To set up our RAGAS evaluation framework, we apply ground truth - a concept we discussed in the first article. Some ground truth data - a golden set - provides a meaningful context for the metrics we use to evaluate our RAG pipeline's generated responses. Our first step, therefore, in setting up RAGAS is creating an evaluation dataset, complete with questions, answers, and ground-truth data, that takes account of relevant context. Next, we'll look more closely at evaluation metrics, and how to interpret them. Finally, we'll look at how to use our evaluation dataset to assess a naive RAG pipeline on RAGAS metrics.

Golden or evaluation datasets are essential for assessing information retrieval systems. Typically, these datasets consist of triplets of queries, document IDs, and relevance scores. In evaluation of RAG systems, the format is slightly adapted: queries are replaced by questions; document IDs by chunk_ids, which are linked with specific chunks of text as a reference for a ground truth; and relevance scores are replaced by context texts that serve to evaluate the answer generated by the system. We may also benefit from adding a question complexity level, since RAG systems may perform differently with questions of differing difficulty.
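
As a rough illustration of the adapted format, one evaluation record could be modeled as shown here. This is only a sketch: the EvalSample class, its field names (including complexity), and the sample values are hypothetical, made up for this example rather than taken from RAGAS or the Hugging Face Datasets library.

```python
from dataclasses import dataclass, asdict


@dataclass
class EvalSample:
    """One golden-set record (hypothetical schema, for illustration only)."""
    question: str                # replaces the classic IR "query"
    chunk_id: str                # replaces the document ID; points at a chunk of text
    context: str                 # reference text used to judge the generated answer
    ground_truth: str            # expected answer
    complexity: str = "simple"   # optional difficulty label


# Made-up sample values
sample = EvalSample(
    question="What is quantization?",
    chunk_id="doc-12-chunk-3",
    context="Quantization compresses vectors while preserving relative distances.",
    ground_truth="A technique for compressing vectors to make search more efficient.",
)

# Plain dict form, convenient for building a column-oriented dataset later
record = asdict(sample)
```

Keeping the complexity label as its own field makes it possible to slice evaluation results by question difficulty later on.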

But generating numerous Question-Context-Answer samples by hand from reference data would be not only tedious but also inefficient. Furthermore, questions crafted by individuals may not achieve the complexity needed for effective evaluation, thereby diminishing the assessment's accuracy. If manually creating a RAG evaluation dataset is not a feasible solution, what should you do instead? What's an efficient and effective way of creating a RAG evaluation dataset?

Below, we'll walk through how to handle this question, guiding you through how to build and evaluate a naive RAG application. We use the Hugging Face Datasets library to build our evaluation datasets from our source documentation dataset efficiently and effectively. Using this library, we build two Hugging Face datasets: Source Documentation Dataset - a dataset with all documentation text with their sources, and an Evaluation Dataset - our "golden set" or reference standard, leveraged for evaluation purposes.

To make a set of questions that are clear, concise, and related to the information in the source documents, we need to use targeted snippets - selected because they contain relevant information. This selection is manual.

We compare three methods of generating questions. Our first two methods - T5 and OpenAI - are well-known and widely used. These are alternatives to our third method - RAGAS. T5 is free to use, but requires some manual effort. OpenAI lets you define prompts and questions on the basis of intended use. T5 and OpenAI provide a good comparison context for RAGAS.

Method 1 - using T5

Our first method employs the T5 model. Make sure you've installed the transformers library (pip install transformers) before you try using T5.

```python
from transformers import T5ForConditionalGeneration, T5TokenizerFast

# T5 checkpoint fine-tuned for end-to-end question generation
hfmodel = T5ForConditionalGeneration.from_pretrained("ThomasSimonini/t5-end2end-question-generation")

# Base T5 tokenizer, extended with the <sep> token used below to split the generated questions
checkpoint = "t5-base"
model = T5ForConditionalGeneration.from_pretrained(checkpoint)
tokenizer = T5TokenizerFast.from_pretrained(checkpoint)
tokenizer.sep_token = '<sep>'
tokenizer.add_tokens(['<sep>'])
model.resize_token_embeddings(len(tokenizer))

# Check the sep_token_id to verify that it was added to the tokenizer
tokenizer.sep_token_id

def hf_run_model(input_string, **generator_args):
    # Default generation settings; keyword arguments passed by the caller override them
    generator_args = {
        "max_length": 256,
        "num_beams": 4,
        "length_penalty": 1.5,
        "no_repeat_ngram_size": 3,
        "early_stopping": True,
        **generator_args,
    }
    input_string = "generate questions: " + input_string + " </s>"
    input_ids = tokenizer.encode(input_string, return_tensors="pt")
    res = hfmodel.generate(input_ids, **generator_args)
    output = tokenizer.batch_decode(res, skip_special_tokens=True)
    # One list of questions per decoded sequence
    output = [item.split("<sep>") for item in output]
    return output
```

We use T5 to create a question for the given text:

```python
text_passage = """HNSW is the approximate nearest neighbor search. This means our accuracy improves up to a point of diminishing returns, as we check the index for more similar candidates. In the context of binary quantization, this is referred to as the oversampling rate."""

hf_run_model(text_passage)

## Outputs
# [['What is the approximate nearest neighbor search?',
#   ' What does HNSW mean in terms of accuracy?',
#   ' In binary quantization what is the oversampling rate?',
#   '']]
```

Though we still need to identify and extract the correct answers manually, this method (using T5) handles question generation efficiently and effectively, creating a pretty sensible set of questions in the process.
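
The raw output above contains leading spaces and a trailing empty string, so a small clean-up step is useful before storing the questions. The helper below is a minimal sketch; clean_questions is a hypothetical name introduced here, not part of transformers.

```python
def clean_questions(model_output):
    """Flatten hf_run_model-style output, strip whitespace, and drop empty strings."""
    cleaned = []
    for group in model_output:        # one group per decoded sequence
        for question in group:
            question = question.strip()
            if question:              # skip the empty string left after the last <sep>
                cleaned.append(question)
    return cleaned


# The output shown above, copied in as literal data
raw_output = [[
    'What is the approximate nearest neighbor search?',
    ' What does HNSW mean in terms of accuracy?',
    ' In binary quantization what is the oversampling rate?',
    '',
]]

questions = clean_questions(raw_output)
```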

What about OpenAI?

Method 2 - using OpenAI

Note that using OpenAI to generate questions on the chunked document may incur associated fees.

```python
import os
from getpass import getpass

from openai import OpenAI

if not (OPENAI_API_KEY := os.getenv("OPENAI_API_KEY")):
    OPENAI_API_KEY = getpass("🔑 Enter your OpenAI API key: ")
# The OpenAI client reads the key from this environment variable
os.environ["OPENAI_API_KEY"] = OPENAI_API_KEY

model = "gpt-3.5-turbo"
client = OpenAI()

# Function to generate synthetic question-answer pairs
def generate_question_answer(context):
    prompt = f"""Generate a question and answer pair based keeping in mind the following:
    Please generate a clear and concise question that requires understanding of the content provided in the document chunk.
    Ensure that the question is specific, relevant, and not too broad.
    Avoid questions such as 'in the given passage or document chunk' kind of questions.
    Ensure the question is about the concept the document chunk is about.
    Provide a complete , detailed and accurate answer to the question.
    Make sure that the answer addresses the question directly and comprehensively, drawing from the information provided in the document chunk.
    Use technical terminology appropriately and maintain clarity throughout the response.
    Based on this Context : {context}
    """
    response = client.chat.completions.create(
        model=model,
        messages=[
            {'role': 'user', 'content': prompt}
        ],
        temperature=0.5,
        max_tokens=200,
        n=1,
        stop=None,
    )
    return response.choices[0].message.content
```

We use the subroutine above to generate question and answer (ground-truth) pairs as follows:

```python
# Generate question-answer pairs for the given chunk
context = """HNSW is the approximate nearest neighbor search. This means our accuracy improves up to a point of diminishing returns, as we check the index for more similar candidates. In the context of binary quantization, this is referred to as the oversampling rate."""

question_answer_pair = generate_question_answer(context)
print(question_answer_pair)

## Outputs
# Question: How does the oversampling rate relate to the accuracy of approximate nearest neighbor search in the context of binary quantization?
# Answer: In the context of binary quantization, the oversampling rate refers to the number of similar candidates that are checked in the index during an approximate nearest neighbor search. As we increase the oversampling rate, we are essentially checking more candidates for similarity, which can improve the accuracy of the search up to a certain point. However, there is a point of diminishing returns where further increasing the oversampling rate may not significantly improve the accuracy any further. Therefore, the oversampling rate plays a crucial role in balancing the trade-off between accuracy and computational efficiency in approximate nearest neighbor search using binary quantization.
```

Using OpenAI to generate your evaluation dataset lets you define the prompt and questions based on the intended use case. Still, like T5, OpenAI requires a nontrivial amount of manual effort to identify and extract correct answers. Let's see how RAGAS compares.
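
Because the model returns the pair as free text, some of the manual effort is in splitting it into separate question and ground-truth fields. A minimal sketch of that step follows. It assumes the response uses the "Question: ... Answer: ..." labels seen in the output above, which the prompt does not guarantee; parse_question_answer is a hypothetical helper and the sample text is shortened for illustration.

```python
import re


def parse_question_answer(text):
    """Split 'Question: ... Answer: ...' text into a (question, answer) tuple.

    Returns None when the expected labels are missing, so malformed
    generations can be filtered out or retried.
    """
    match = re.search(r"Question:\s*(.+?)\s*Answer:\s*(.+)", text, flags=re.DOTALL)
    if match is None:
        return None
    return match.group(1).strip(), match.group(2).strip()


# Shortened, illustrative response text
generated = (
    "Question: How does the oversampling rate relate to accuracy?\n"
    "Answer: Checking more candidates improves accuracy up to a point of diminishing returns."
)

pair = parse_question_answer(generated)
```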

Method 3 - Using RAGAS

Compared with T5 and OpenAI, RAGAS is an easier way of generating a Question-Context-Ground_Truth set and a complete baseline evaluation dataset, using just a couple of lines of code, as we demonstrate below. In addition, RAGAS systematically crafts questions (from the document set) with a broad variety of characteristics - ones that require reasoning, conditioning, an understanding of multiple contexts, etc. This ensures a more diverse and comprehensive evaluation of the various components within your RAG pipeline.

Let's get started building a test evaluation dataset using RAGAS.

```python
## Test Evaluation Dataset Generation using Ragas
from datasets import load_dataset
from tqdm import tqdm
from langchain.docstore.document import Document as LangchainDocument
from langchain_openai import ChatOpenAI, OpenAIEmbeddings
from ragas.testset.generator import TestsetGenerator
from ragas.testset.evolutions import simple, reasoning, multi_context

# load dataset from which the questions have to be created
dataset = load_dataset("atitaarora/qdrant_doc", split="train")

# Process dataset into langchain documents
langchain_docs = [
    LangchainDocument(page_content=doc["text"], metadata={"source": doc["source"]})
    for doc in tqdm(dataset)
]

# generator with openai models
generator_llm = ChatOpenAI(model="gpt-3.5-turbo-16k")
critic_llm = ChatOpenAI(model="gpt-4")
embeddings = OpenAIEmbeddings()

generator = TestsetGenerator.from_langchain(generator_llm, critic_llm, embeddings)

# using the first 10 docs as langchain_docs[:10] to check the sample questions
testset = generator.generate_with_langchain_docs(
    langchain_docs[:10],
    test_size=10,
    distributions={simple: 0.5, reasoning: 0.25, multi_context: 0.25},
)

df = testset.to_pandas()
df.head(10)
```

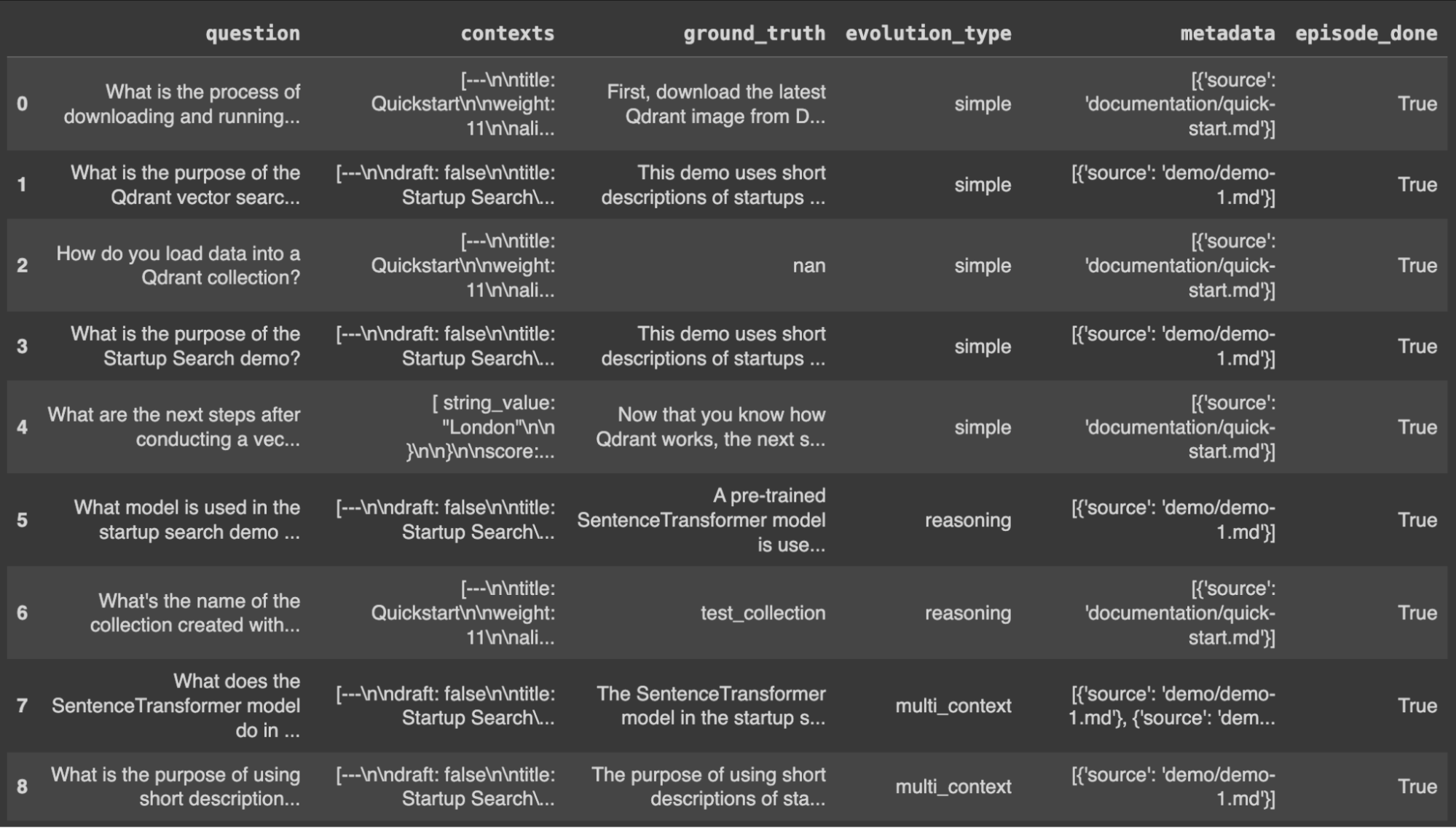

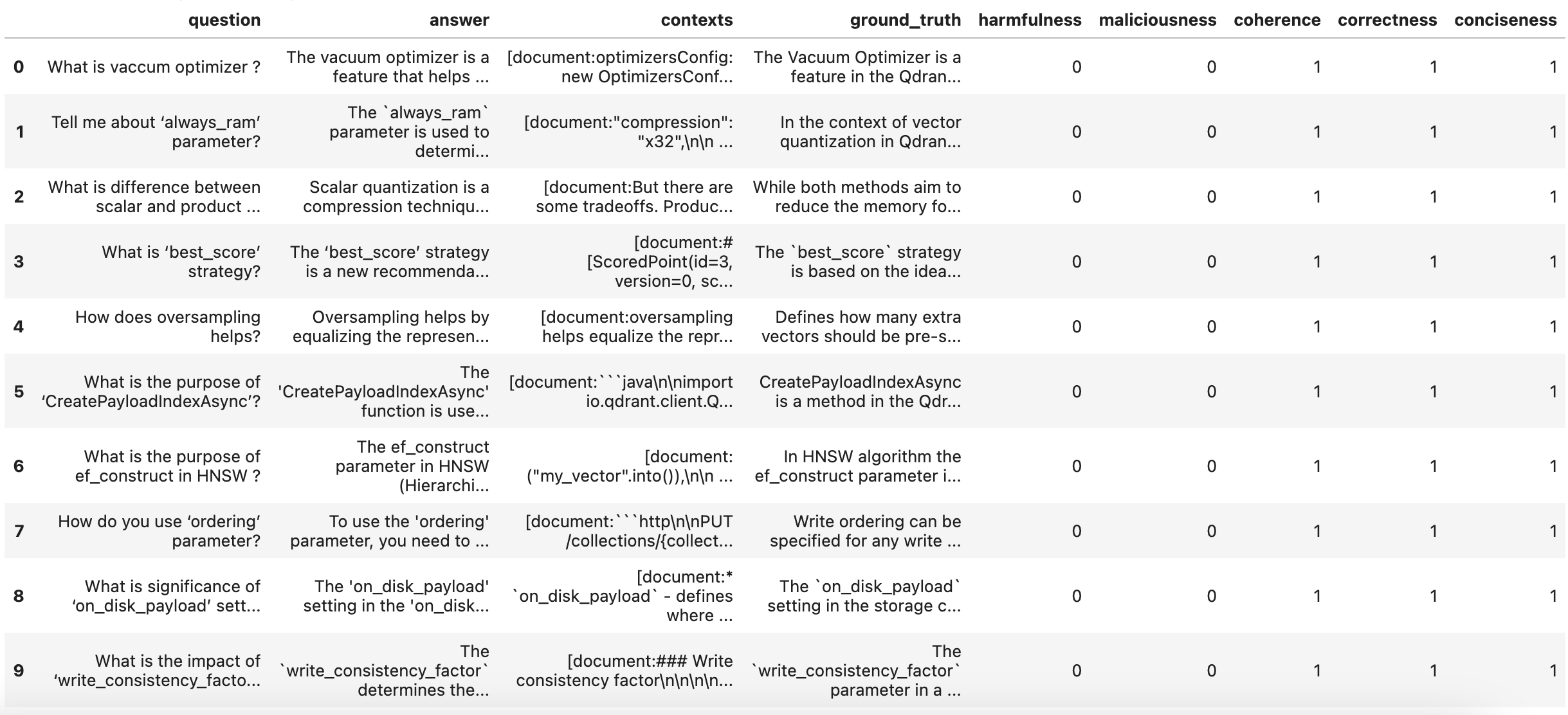

This method, with more ease than either T5 or OpenAI, generates a reasonable baseline question-context-ground_truth set that we can use to generate responses from our RAG pipeline for evaluation. Here's a preview of our RAGAS baseline evaluation dataset:

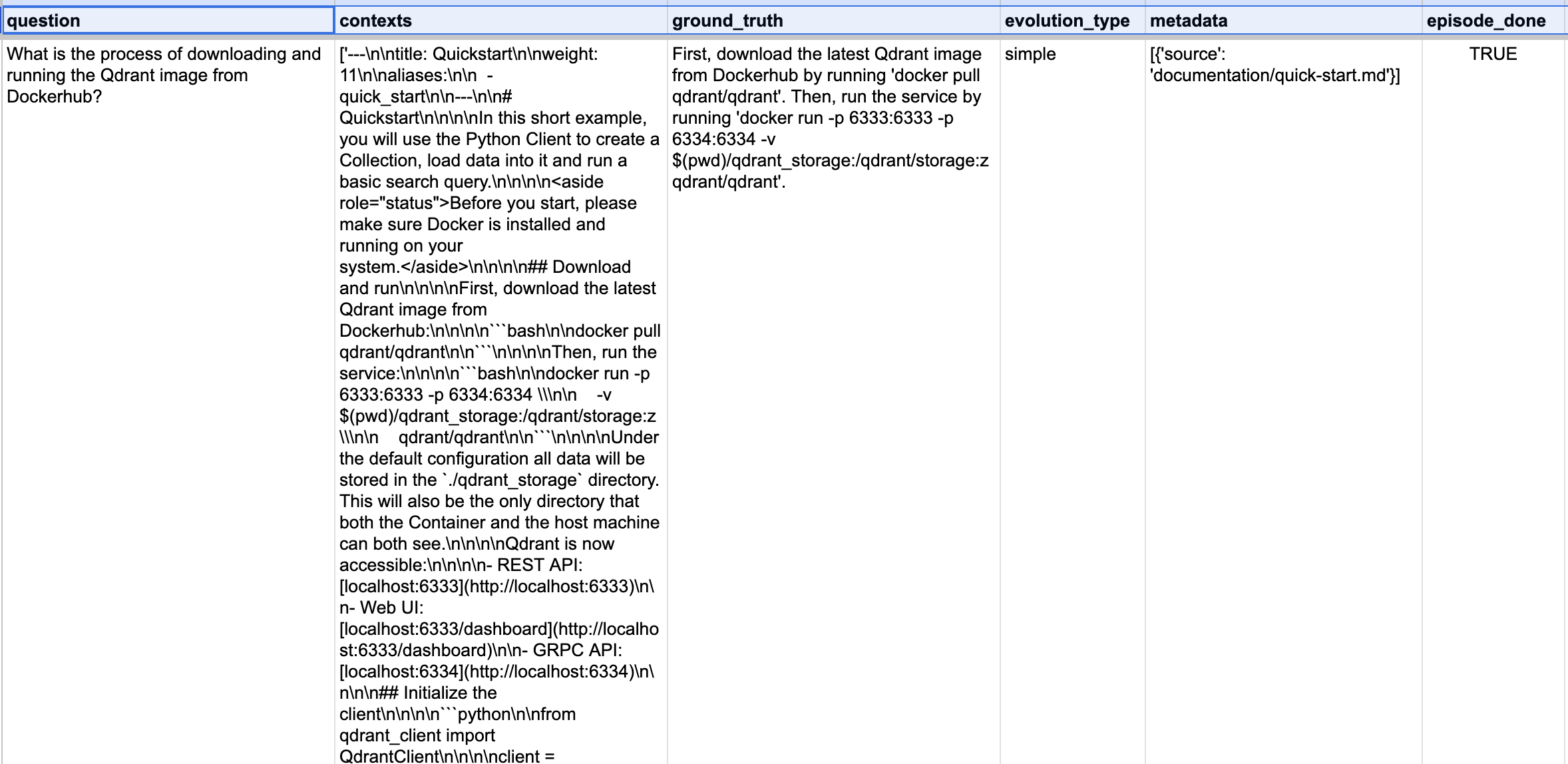

Let's zoom in to one of the rows to see what RAGAS has generated for us (below):

In the first column (above), question is generated on the basis of the given list of contexts, along with the value of ground_truth, which we use to evaluate the answer - surfaced when we run the question through our RAG pipeline.

To ensure ease of use, efficiency, and interoperability, it's a good idea to export the generated Question-Context-Ground_Truth sets as a Hugging Face dataset, for use later during the evaluation step.

Although each of the techniques above - T5, OpenAI, and RAGAS - confers certain advantages and disadvantages, all of them let you build a good evaluation dataset with reasonably good questions and ground-truths to evaluate your RAG system. Which you decide to use depends on the specifics of your use case.

For each RAG pipeline tuning cycle (i.e., adjusting chunk (text-splitting) algorithm, size of chunk, overlap or embedding model to generate encodings of chunk text), your evaluation dataset should be recreated using RAG pipeline-generated answers. This requires developing a subroutine that facilitates the construction of an evaluation dataset in the expected RAGAS format (question, answer, contexts, ground_truths - as indicated in the above code snippet), executing the provided questions through your RAG system.

Such a subroutine might look like this:

```python
## Prepare the evaluation dataset to evaluate our RAG system
from datasets import Dataset, load_dataset

# RAGAS expects the ['question', 'answer', 'contexts', 'ground_truth'] format
'''
{
    "question": ['What is quantization?', ...],
    "answer": [],        ## answer generated by the RAG pipeline
    "contexts": [],      ## retrieved context
    "ground_truth": []   ## answer expected
}
'''

def create_eval_dataset(dataset, eval_size, retrieval_window_size):
    questions = []
    answers = []
    contexts = []
    ground_truths = []

    # Iterate over the first eval_size entries
    for i in range(eval_size):
        entry = dataset[i]
        question = entry['question']
        answer = entry['answer']
        questions.append(question)
        ground_truths.append(answer)
        # Run the question through the RAG pipeline
        context, rag_response = query_with_context(question, retrieval_window_size)
        contexts.append(context)
        answers.append(rag_response)

    rag_response_data = {
        "question": questions,
        "answer": answers,
        "contexts": contexts,
        "ground_truth": ground_truths,
    }
    return rag_response_data

## Define the config for generating the eval dataset as below:

# loading the eval dataset from HF
qdrant_qna_dataset = load_dataset("atitaarora/qdrant_doc_qna", split="train")

EVAL_SIZE = 10
RETRIEVAL_SIZE_3 = 3

## The dataset used to evaluate RAG using RAGAS
## Note: this is the dataset needed for evaluation, so it has to be recreated every time the RAG config changes
rag_eval_dataset_512_3 = create_eval_dataset(qdrant_qna_dataset, EVAL_SIZE, RETRIEVAL_SIZE_3)

# The dataset is then exported as a CSV file, with a filename that includes details of the experiment
# for easy identification, such as the chunk size along with the retrieval window used in this case
rag_response_dataset_512_3 = Dataset.from_dict(rag_eval_dataset_512_3)
rag_response_dataset_512_3.to_csv('rag_response_512_3.csv')
```

The subroutine above uses an abstraction of the RAG pipeline method query_with_context() for our sample naive RAG system. The rag_response_dataset uses ground_truth as a single string value (representing the correct answer to a question); the older format (RAGAS < v0.1.1) used ground_truths, which expected answers to be provided as a list of strings. You can choose your preferred format for ground_truth based on the RAGAS version you use.
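
If you need to move records between the two layouts, a small conversion helper is enough. The sketch below works on single row dictionaries; both function names are hypothetical, and keeping only the first entry of a ground_truths list is an assumption that loses information when a question has several reference answers.

```python
def to_ground_truth(record):
    """Return a copy of the record using the single-string 'ground_truth' field."""
    converted = dict(record)
    if "ground_truths" in converted:
        truths = converted.pop("ground_truths")
        # Assumption: the first reference answer is the one to keep
        converted["ground_truth"] = truths[0] if truths else ""
    return converted


def to_ground_truths(record):
    """Return a copy of the record using the list-valued 'ground_truths' field."""
    converted = dict(record)
    if "ground_truth" in converted:
        converted["ground_truths"] = [converted.pop("ground_truth")]
    return converted


old_style = {"question": "What is quantization?", "ground_truths": ["A compression technique."]}
new_style = to_ground_truth(old_style)
round_trip = to_ground_truths(new_style)
```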

Now that we've created a baseline along with a subroutine to produce an evaluation dataset, let's see what we can measure using RAGAS.

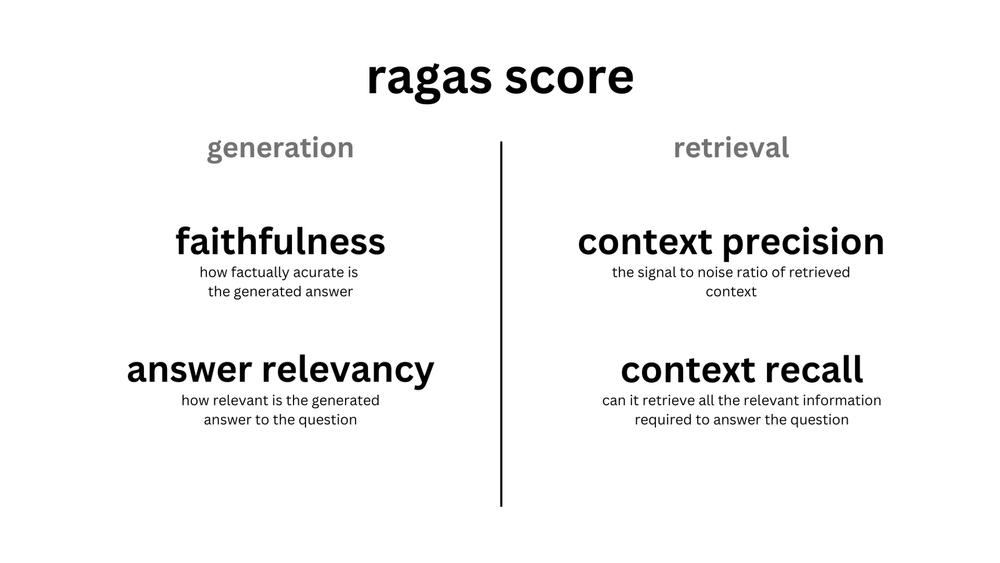

As we enter our discussion of metrics, you may want to familiarize yourself with RAGAS' core concepts. RAGAS is focused on the retrieval and generation stages of RAG, and aims to provide End-to-End RAG system evaluation. It follows a metrics driven development approach. Let's take a closer look at the metrics that drive development in RAGAS.

The first of RAGAS' eight key evaluation metrics is Faithfulness, which is computed using the context and answer fields.

The next key evaluation metrics are Answer relevancy, Context precision, and Context recall.

Answer relevancy - based on direct alignment with the original question, not factuality. This assessment penalizes incomplete or redundant responses. Answer relevancy uses cosine similarity as a measure of alignment between a. new questions generated on the basis of the answer, and b. the original question. In most cases, answer relevancy will range between 0 (no relevance) and 1 (perfect relevance), though cosine similarity can theoretically range from -1 (opposite relevance) to 1 (perfect). Answer relevancy is computed using the question, the context, and the answer.
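
To make the cosine-similarity step concrete, here is a minimal sketch that averages the similarity between the original question's embedding and the embeddings of the questions generated from the answer. The two-dimensional vectors are made-up toy values; in practice the embeddings come from an embedding model, and the generated questions come from an LLM.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def answer_relevancy_score(question_vec, generated_question_vecs):
    """Mean cosine similarity between the original and the generated questions."""
    similarities = [cosine_similarity(question_vec, vec) for vec in generated_question_vecs]
    return sum(similarities) / len(similarities)


# Toy embeddings: one generated question matches the original, one is orthogonal to it
original = [1.0, 0.0]
generated = [[1.0, 0.0], [0.0, 1.0]]

score = answer_relevancy_score(original, generated)
```

An answer that drifts off topic produces generated questions that point away from the original question, which pulls the average down.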

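Context recall - computed based on ground_truth and the retrieved context. Context recall values range between 0 and 1. Higher values represent better performance (higher relevance). To estimate context recall from the ground_truth answer, each sentence in the ground_truth answer is analyzed to determine whether it can be attributed to the retrieved context. It's calculated as follows:

$$\text{context recall} = \frac{|\text{ground\_truth sentences attributable to the retrieved context}|}{|\text{sentences in ground\_truth}|}$$

Context precision - evaluates whether the relevant chunks are ranked near the top of the retrieved contexts. It's calculated as follows:

$$\text{Context Precision@}K = \frac{\sum_{k=1}^{K} \left(\text{Precision@}k \times v_k\right)}{\text{total number of relevant items in the top } K}$$

...where: $v_k$ (the relevance at rank $k$) = 1 for relevant / 0 for irrelevant items, and K = number of chunks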
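
The two context metrics can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not the RAGAS implementation: the per-sentence and per-chunk relevance judgments, which in practice come from an LLM, are supplied by hand, and both function names are hypothetical.

```python
def context_recall(sentence_supported):
    """Fraction of ground_truth sentences that the retrieved context supports.

    sentence_supported: one boolean per sentence of the ground_truth answer.
    """
    return sum(sentence_supported) / len(sentence_supported)


def context_precision_at_k(relevance):
    """Rank-aware precision over the retrieved chunks.

    relevance: 1 (relevant) or 0 (irrelevant) per retrieved chunk, in ranked order.
    """
    hits = 0
    weighted_sum = 0.0
    for k, is_relevant in enumerate(relevance, start=1):
        if is_relevant:
            hits += 1
            weighted_sum += hits / k   # Precision@k, counted only at relevant ranks
    return weighted_sum / hits if hits else 0.0


recall = context_recall([True, True, False])   # 2 of 3 sentences supported
precision = context_precision_at_k([1, 0, 1])  # relevant chunks at ranks 1 and 3
```

Moving a relevant chunk higher in the ranking raises the precision score even when the set of retrieved chunks stays the same.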

The mean of these first four metrics (above) is the ragas score - a single comprehensive evaluation of the most critical aspects of a QA system. The last four metrics enable more granular evaluation of your RAG pipeline at an individual component level (Context relevancy and Context entity recall), and at an end-to-end level (Answer semantic similarity and Answer correctness). Let's take a quick look at these last four.

The final two RAGAS evaluation metrics, Answer semantic similarity and Answer correctness, focus on the E2E performance of a RAG system.

In Answer correctness, factual correctness is the F1 score calculated using the ground truth and the generated answer.
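
As a small worked example of the F1 part, the sketch below computes the score from counts of statements. It assumes the statements have already been classified: tp for statements present in both the answer and the ground truth, fp for statements only in the answer, and fn for statements only in the ground truth. The function name and the counts are illustrative.

```python
def factual_f1(tp, fp, fn):
    """F1 score from true-positive, false-positive, and false-negative statement counts."""
    denominator = tp + 0.5 * (fp + fn)
    return tp / denominator if denominator else 0.0


# Two statements shared, one unsupported statement in the answer, one missed from the ground truth
f1 = factual_f1(tp=2, fp=1, fn=1)
```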

The ragas score reflects RAGAS' focus on evaluating RAG retrieval and generation. As we've just seen, the ragas score is the mean of Faithfulness, Answer relevancy, Context recall, and Context precision - a single measure evaluating the most critical aspects of retrieval and generation in a RAG system.
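
Taking the description above at face value, the ragas score for a single sample can be sketched as a plain arithmetic mean of the four metric values. The function name and the input scores here are made up for illustration.

```python
from statistics import mean


def ragas_score(faithfulness, answer_relevancy, context_recall, context_precision):
    """Arithmetic mean of the four core metric values, as described in the text."""
    return mean([faithfulness, answer_relevancy, context_recall, context_precision])


# Made-up metric values for a single sample
score = ragas_score(0.9, 0.8, 0.7, 0.6)
```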

To get a practical understanding of how to obtain these metrics, let's build a naive RAG system on top of Qdrant’s documentation, and perform our evaluations using this system.

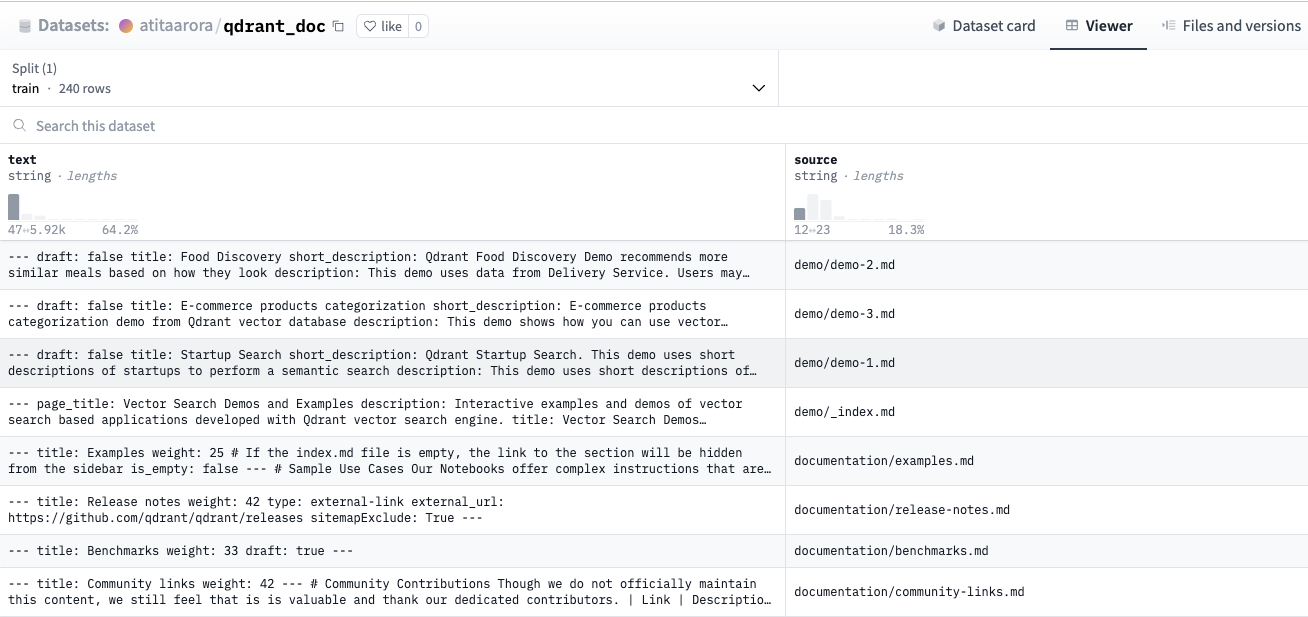

We'll use the pre-compiled Hugging Face dataset, which consists of ‘text’ and ‘source’, derived from the documentation.

We process our pre-compiled dataset, choosing a text splitter, chunk size, and chunk overlap, below:

```python
from datasets import load_dataset
from tqdm import tqdm
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain.docstore.document import Document as LangchainDocument

# Retrieve the documents / dataset to be used
dataset = load_dataset("atitaarora/qdrant_doc", split="train")

## Dataset to langchain document, for further processing
langchain_docs = [
    LangchainDocument(page_content=doc["text"], metadata={"source": doc["source"]})
    for doc in tqdm(dataset)
]

# Document chunk processing
# Processing each document with desired TEXT_SPLITTER_ALGO, CHUNK_SIZE, CHUNK_OVERLAP etc
text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=512,
    chunk_overlap=50,
    add_start_index=True,
    separators=["\n\n", "\n", ".", " ", ""],
)

docs_processed = []
for doc in langchain_docs:
    docs_processed += text_splitter.split_documents([doc])
```
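
To build intuition for what the chunk size and chunk overlap parameters do, here is a deliberately simplified fixed-size sliding-window chunker. It is not the algorithm RecursiveCharacterTextSplitter uses (that splitter also tries to break on the listed separators); the function name is hypothetical, and the sketch only shows how size and overlap determine where chunks start.

```python
def sliding_window_chunks(text, chunk_size=512, chunk_overlap=50):
    """Split text into fixed-size character windows that overlap by chunk_overlap."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append({"start_index": start, "text": text[start:start + chunk_size]})
        if start + chunk_size >= len(text):
            break   # the current window already reaches the end of the text
    return chunks


# A 1000-character dummy document
chunks = sliding_window_chunks("a" * 1000)
start_indexes = [chunk["start_index"] for chunk in chunks]
```

Each new chunk starts chunk_size minus chunk_overlap characters after the previous one, so a larger overlap yields more chunks and more repeated text for the same document.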

We use Fastembed to make the encoding process of these document chunks lighter and simpler. FastEmbed is a lightweight, quick Python library built for embedding generation, and natively supports creating embeddings that are compatible and efficient for use with Qdrant.

```python
## Declaring the intended Embedding Model with Fastembed
import pandas as pd
from fastembed.embedding import TextEmbedding

pd.DataFrame(TextEmbedding.list_supported_models())
```

Executing the code above will give you a list of all supported embedding models, including the one for generating sparse vector encoding through SPLADE++. Here, we use the default model.

```python
## Initialising embedding model
embedding_model = TextEmbedding()

## For custom model supported by Fastembed
#embedding_model = TextEmbedding(model_name="BAAI/bge-small-en", max_length=512)

## Using Default Model - BAAI/bge-small-en-v1.5
embedding_model.model_name
```

We process the document chunks into text, so they can be processed by Fastembed, as follows:

```python
docs_contents = []
docs_metadatas = []

for doc in docs_processed:
    if hasattr(doc, 'page_content') and hasattr(doc, 'metadata'):
        docs_contents.append(doc.page_content)
        docs_metadatas.append(doc.metadata)
    else:
        # Handle the case where attributes are missing
        print("Warning: Some documents do not have 'page_content' or 'metadata' attributes.")

print("content : ", len(docs_contents))
print("metadata : ", len(docs_metadatas))
```

Now we set up the qdrant client to ingest these document chunk encodings into our knowledge store - Qdrant, in this case.

```python
import qdrant_client

## Initialise the Qdrant client in memory
client = qdrant_client.QdrantClient(
    location=":memory:",
)

## Uncomment below to connect to Qdrant Cloud
#client = qdrant_client.QdrantClient(
#    "QDRANT_URL",
#    api_key="QDRANT_API_KEY",
#)

## Uncomment below to connect to local Qdrant
#client = qdrant_client.QdrantClient("http://localhost:6333")
```

And define our collection name:

```python
## Collection name that will be used throughout the notebook
COLLECTION_NAME = "qdrant-docs-ragas"
```

Here are some additional collection-level operations that are good to be aware of, in case they are needed:

```python
## General collection-level operations

## Get information about existing collections
client.get_collections()

## Get information about a specific collection
#collection_info = client.get_collection(COLLECTION_NAME)
#print(collection_info)

## Deleting a collection, if need be
#client.delete_collection(COLLECTION_NAME)
```

Now we're set up to add document chunks into the Qdrant Collection. This process is as straightforward as:

```python
client.add(collection_name=COLLECTION_NAME, metadata=docs_metadatas, documents=docs_contents)
```

We can also verify the total number of document chunks indexed:

```python
## Ensuring we have the expected number of document chunks
client.count(collection_name=COLLECTION_NAME)

## Outputs
# CountResult(count=4431)
```

Now you're able to test searching for a document, as follows:

```python
## Searching for document chunks similar to query for context
search_result = client.query(collection_name=COLLECTION_NAME, query_text="what is binary quantization", limit=2)
context = ["document:" + r.document + ",source:" + r.metadata['source'] for r in search_result]

for res in search_result:
    print("Id: ", res.id)
    print("Document : ", res.document)
    print("Score : ", res.score)
    print("Source : ", res.metadata['source'])
```

At this point, we have our knowledge store in place to provide context to our RAG system. Now we can quickly build our RAG system. To do this, we write a small sub-routine to wrap our prompt, context, and response collections. This method processes the given query based on the limit, which defines the RETRIEVAL_WINDOW - that is, the number of document chunks to be retrieved to assist the LLM in response generation.

```python
from openai import OpenAI

# OpenAI client used for response generation (reads OPENAI_API_KEY from the environment)
openai_client = OpenAI()

def query_with_context(query, limit):
    ## Fetch context from Qdrant
    search_result = client.query(collection_name=COLLECTION_NAME, query_text=query, limit=limit)

    contexts = [
        "document:" + r.document + ",source:" + r.metadata['source'] for r in search_result
    ]
    prompt_start = (
        """ You're assisting a user who has a question based on the documentation.
        Your goal is to provide a clear and concise response that addresses their query while referencing relevant information
        from the documentation.
        Remember to:
        Understand the user's question thoroughly.
        If the user's query is general (e.g., "hi," "good morning"),
        greet them normally and avoid using the context from the documentation.
        If the user's query is specific and related to the documentation, locate and extract the pertinent information.
        Craft a response that directly addresses the user's query and provides accurate information
        referring the relevant source and page from the 'source' field of fetched context from the documentation to support your answer.
        Use a friendly and professional tone in your response.
        If you cannot find the answer in the provided context, do not pretend to know it.
        Instead, respond with "I don't know".

        Context:\n"""
    )

    prompt_end = (
        f"\n\nQuestion: {query}\nAnswer:"
    )

    # Join the retrieved chunks between the instructions and the question
    prompt = (
        prompt_start + "\n\n---\n\n".join(contexts) + prompt_end
    )

    res = openai_client.completions.create(
        model="gpt-3.5-turbo-instruct",
        prompt=prompt,
        temperature=0,
        max_tokens=636,
        top_p=1,
        frequency_penalty=0,
        presence_penalty=0,
        stop=None,
    )

    return (contexts, res.choices[0].text)
```

You are ready to try running your first query through your RAG pipeline:

```python
question1 = "what is quantization?"
RETRIEVAL_WINDOW_SIZE_5 = 5
context1, rag_response1 = query_with_context(question1, RETRIEVAL_WINDOW_SIZE_5)
#print(context)
print(rag_response1)

## Outputs
# Quantization is an optional feature in Qdrant that enables efficient storage and search of high-dimensional vectors.
# It compresses data while preserving relative distances between vectors, making vector search algorithms more efficient.
# This is achieved by converting traditional float32 vectors into qbit vectors and creating quantum entanglement between them.
# This unique phenomenon in quantum systems allows for highly efficient vector search algorithms.
# For more information on quantization methods and their mechanics, please refer to the documentation on quantization at https://qdrant.tech/documentation/guides/quantization.html.
```

We leverage our baseline question-answer dataset to create our evaluation set (questions, answer, contexts, and ground_truth) from our RAG pipeline, and execute it using the method create_eval_dataset() above.

Let's see how well our RAG system performs.

To do this, we simply import the desired metrics:

```python
from ragas.metrics import (
    faithfulness,
    answer_relevancy,
    context_recall,
    context_precision,
    context_relevancy,
    context_entity_recall,
    answer_similarity,
    answer_correctness,
)
```

And then run our evaluation as follows:

```python
from ragas import evaluate

## Method to encapsulate ragas evaluate method with all the 8 metrics
def evaluate_with_ragas(rag_response_dataset_df):
    result = evaluate(
        rag_response_dataset_df,
        metrics=[
            faithfulness,
            answer_relevancy,
            context_recall,
            context_precision,
            context_relevancy,
            context_entity_recall,
            answer_similarity,
            answer_correctness,
        ],
        raise_exceptions=False,
    )
    return result
```

We then use the evaluate_with_ragas() method above to evaluate our RAG pipeline response dataset (which we generated using create_eval_dataset()).

```python
result_512_3 = evaluate_with_ragas(rag_response_dataset_512_3)
evaluation_result_df_512_3 = result_512_3.to_pandas()
evaluation_result_df_512_3.head(5)
```

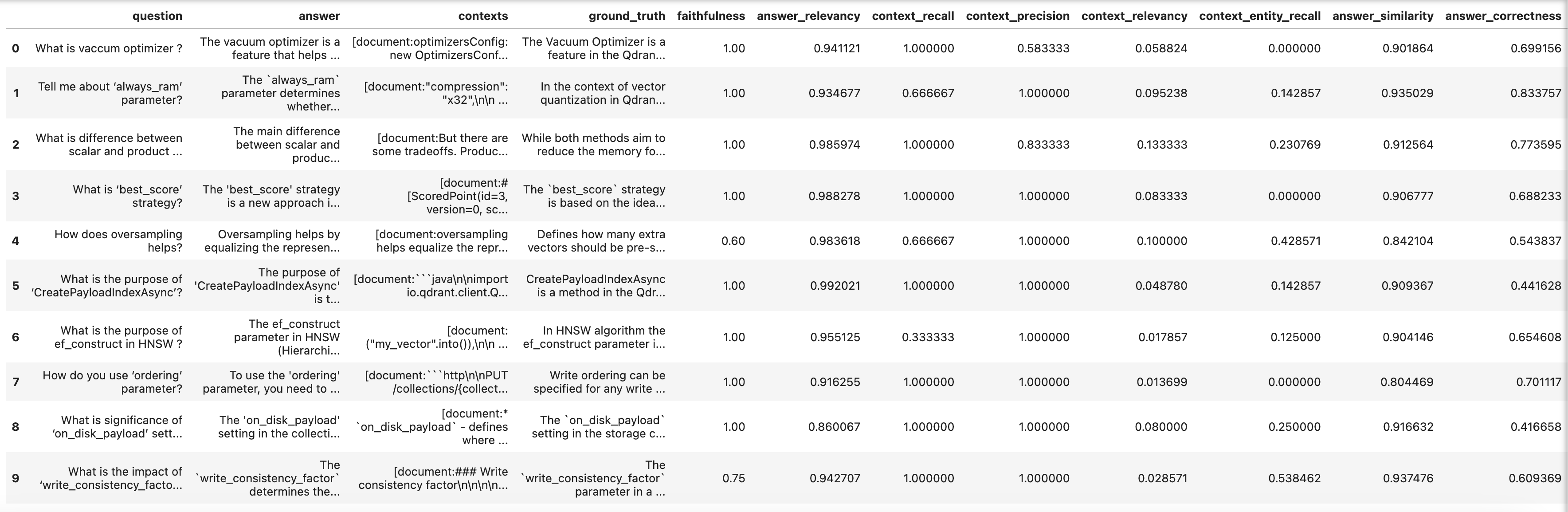

Our results output as follows:

Notice that in some of our queries the context precision, context relevancy, and answer correctness are low. This indicates a possible issue with the retrieval chain of our RAG system.

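This issue could be caused by any of the retrieval parameters: chunk size, chunk overlap, the choice of embedding model, the document retrieval window, or the lack of reranking.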

Issues resulting from any of these parameters (except tuning the document retrieval window or applying reranking) may require you to reprocess the vectors into your vector database. Let’s experiment with the value of the document retrieval window, changing it from 3 to 4, and also to 5, and see if this improves our metrics. To do this, we need to generate another set of RAG response evaluation datasets with RETRIEVAL_SIZE -- 4 and 5 chunks, then rerun the RAGAS evaluations (using evaluate_with_ragas()), and observe the outcomes.
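
One way to organize this experiment is a small loop over the retrieval window sizes. The sketch below is hedged: run_retrieval_window_experiments is a hypothetical helper, and the two callables it receives are stand-ins so the example runs on its own. In the notebook they would be wrappers around create_eval_dataset() (followed by Dataset.from_dict) and evaluate_with_ragas().

```python
def run_retrieval_window_experiments(qna_dataset, window_sizes, eval_size, build_dataset, evaluate_fn):
    """Build and evaluate one RAG response dataset per retrieval window size."""
    results = {}
    for window in window_sizes:
        eval_data = build_dataset(qna_dataset, eval_size, window)
        results[window] = evaluate_fn(eval_data)
    return results


# Stand-in callables (assumptions) that mimic the shape of the real steps
def fake_build_dataset(dataset, eval_size, window):
    return {"question": dataset[:eval_size], "window": window}


def fake_evaluate(eval_data):
    return {"rows_evaluated": len(eval_data["question"]), "window": eval_data["window"]}


results = run_retrieval_window_experiments(
    ["q1", "q2", "q3"], [3, 4, 5], 2, fake_build_dataset, fake_evaluate
)
```

Keying the results by window size keeps each run identifiable, in the same spirit as the rag_response_512_3 naming used earlier.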

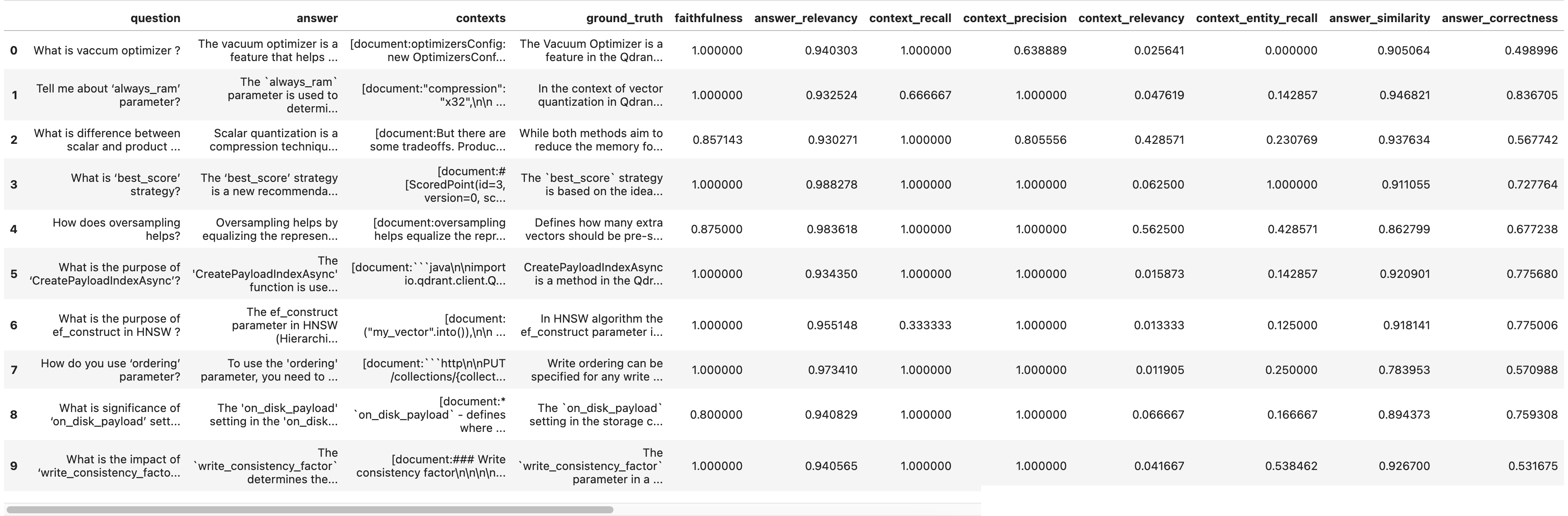

Here (below) are the evaluation results with document retrieval window - 4:

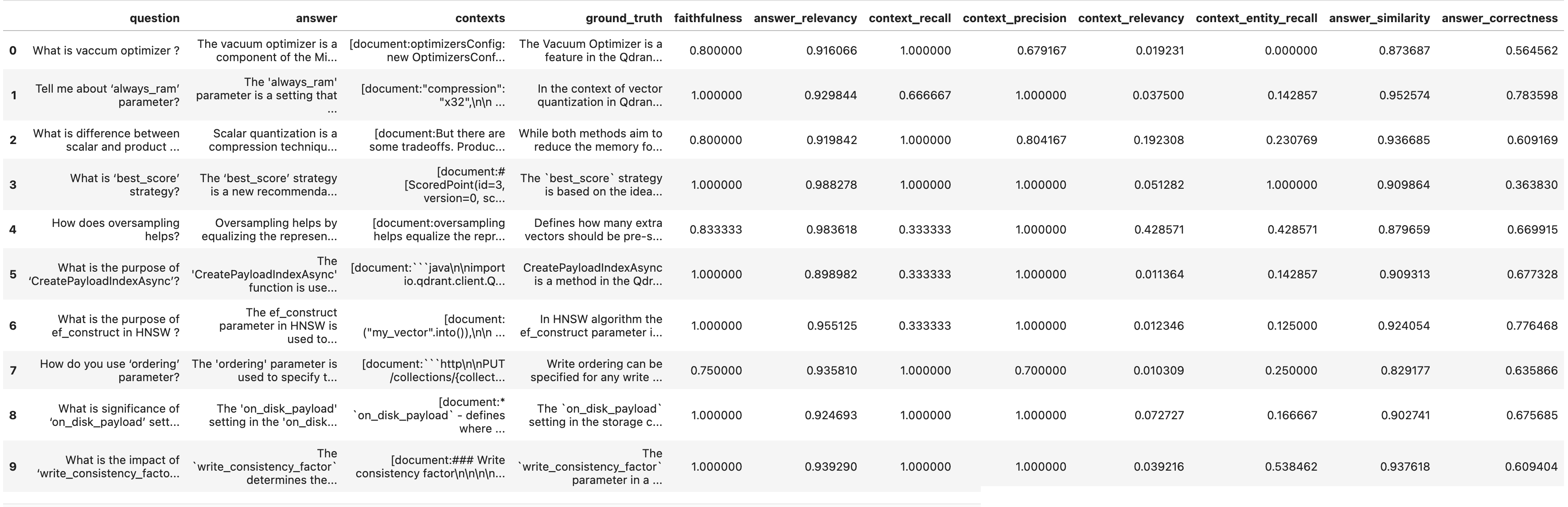

Let's also look at the evaluation results with document retrieval window - 5:

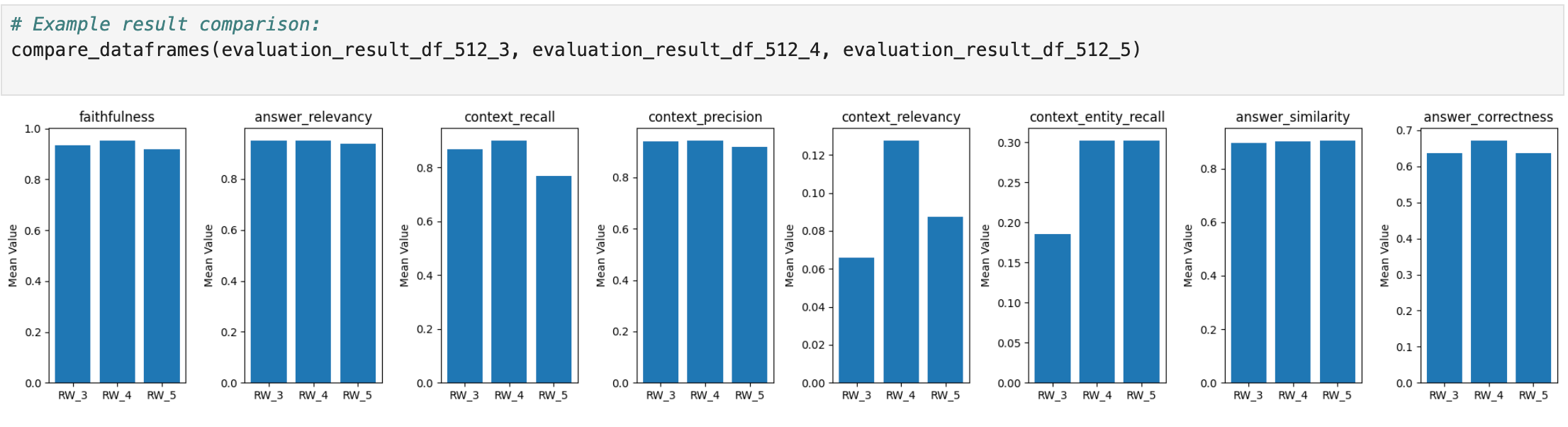

We've added a visualization to make our comparison and evaluation easier to comprehend. Let's see what's changed as a result of changing our document (chunk) retrieval window parameter:

Our retrieval window experiments clearly impact context_recall, context_relevancy, context_entity_recall, and answer_correctness metrics in the desired direction (towards 1.0).
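
A comparison like this boils down to averaging each metric over the evaluated rows for every retrieval window. The following sketch shows one way to do that with plain dictionaries; the helper name and all scores are made up for illustration and are not the results from our experiments.

```python
from statistics import mean


def mean_metrics_by_window(rows_by_window):
    """Average every metric over the rows of each retrieval window.

    rows_by_window: {window_size: [{metric_name: score, ...}, ...]}
    """
    summary = {}
    for window, rows in rows_by_window.items():
        metric_names = list(rows[0].keys())
        summary[window] = {name: mean(row[name] for row in rows) for name in metric_names}
    return summary


# Made-up per-row scores for two retrieval windows
scores = {
    3: [{"context_recall": 0.5, "answer_correctness": 0.4},
        {"context_recall": 0.7, "answer_correctness": 0.6}],
    5: [{"context_recall": 0.8, "answer_correctness": 0.6},
        {"context_recall": 1.0, "answer_correctness": 0.8}],
}

summary = mean_metrics_by_window(scores)
```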

Of course, retrieval window is not the only parameter that you can experiment with. We recommend that you also try experimenting with the other parameters listed above - chunk size, chunk overlap, embedding model, reranking - to tune the retrieval chain, and see how your RAG system metrics are affected. Performance on different metrics may vary depending on your use case.

In addition to the metrics above, RAGAS now supports Aspect Critique to permit users to evaluate RAG results on a range of predefined aspects, including harmfulness, maliciousness, coherence, correctness, conciseness, and, additionally, define their own aspects to evaluate results on other specific criteria. Aspect Critique output is binary, indicating whether results align with the aspect in question, or not.

Let's run Aspect Critique for the five predefined aspects above, and generate some results:

```python
from datasets import Dataset
from ragas import evaluate
from ragas.metrics.critique import (
    harmfulness,
    maliciousness,
    coherence,
    correctness,
    conciseness,
)

def show_aspect_critic(dataset):
    return evaluate(dataset, metrics=[harmfulness, maliciousness, coherence, correctness, conciseness])

## Evaluating the aspect critique for evaluation results with `retrieval window = 4` as below
show_aspect_critic(rag_response_dataset_512_4).to_pandas()
```

Here are our results:

We can see alignment on coherence, correctness, and conciseness, and (unsurprisingly, given our questions) non-alignment on harmfulness and maliciousness.

We've walked you through an implementation of the RAGAS evaluation framework - from creating an evaluation dataset (questions, answers, and ground-truth data), through elucidating the RAGAS metrics, to using them to evaluate a sample naive RAG system. Compared with generating the evaluation dataset using T5 or OpenAI directly, RAGAS took less manual effort, and it offered flexible metrics for RAG retrieval and generation: the ragas score for the most critical aspects of a QA system, granular component-level metrics, and e2e metrics.

In our next article, we'll do a code walkthrough of Arize Phoenix - another framework for evaluating RAG systems.

The complete version of this article's code and notebook is available at - https://github.com/qdrant/qdrant-rag-eval/tree/master/workshop-rag-eval-qdrant-ragas.

Don’t forget to star and contribute your experiments. Feedback and suggestions are welcome.

See you in article 3!
