We look at the limitations not just of LLMs but also of standard RAG solutions to LLMs' knowledge and reasoning gaps, and examine the ways Knowledge Graphs combined with vector embeddings can fill these gaps: through graph embedding constraints, judicious choice of reasoning techniques, careful retrieval design, collaborative filtering, and flywheel learning.

Large Language Models (LLMs) mark a watershed moment in natural language processing, creating new abilities in conversational AI, creative writing, and a broad range of other applications. But they have limitations. While LLMs can generate remarkably fluent and coherent text from nothing more than a short prompt, LLM knowledge is not grounded in real-world data; it is restricted to patterns learned from training data. In addition, LLMs can't do logical inference or synthesize facts from multiple sources; as queries become more complex and open-ended, LLM responses become contradictory or nonsensical.

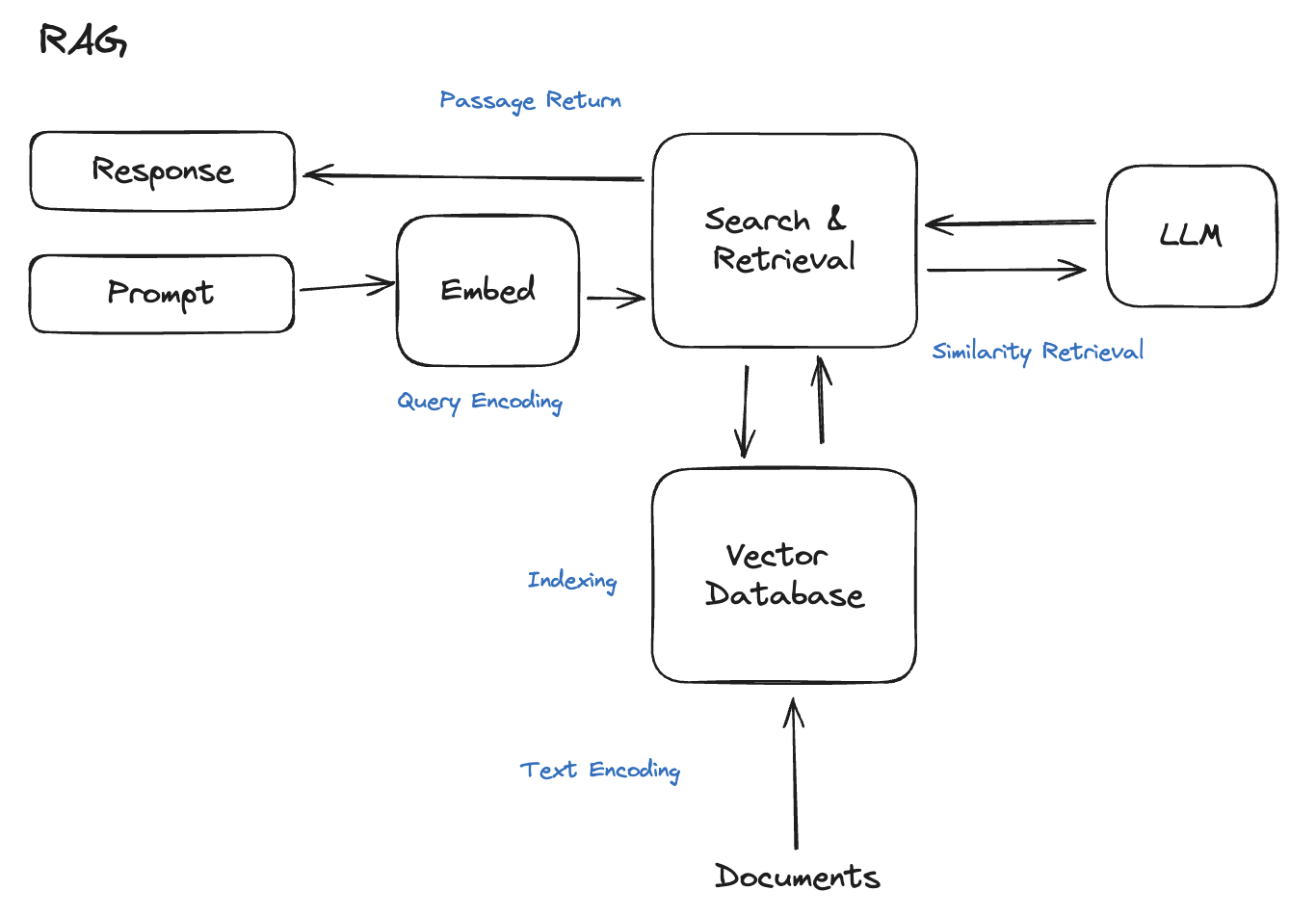

Retrieval Augmented Generation (RAG) systems have filled some of the LLM gaps by surfacing external source data using semantic similarity search on vector embeddings. Still, because RAG systems don't have access to network structure data (the interconnections between contextual facts), they struggle to achieve true relevance, aggregate facts, and perform chains of reasoning.

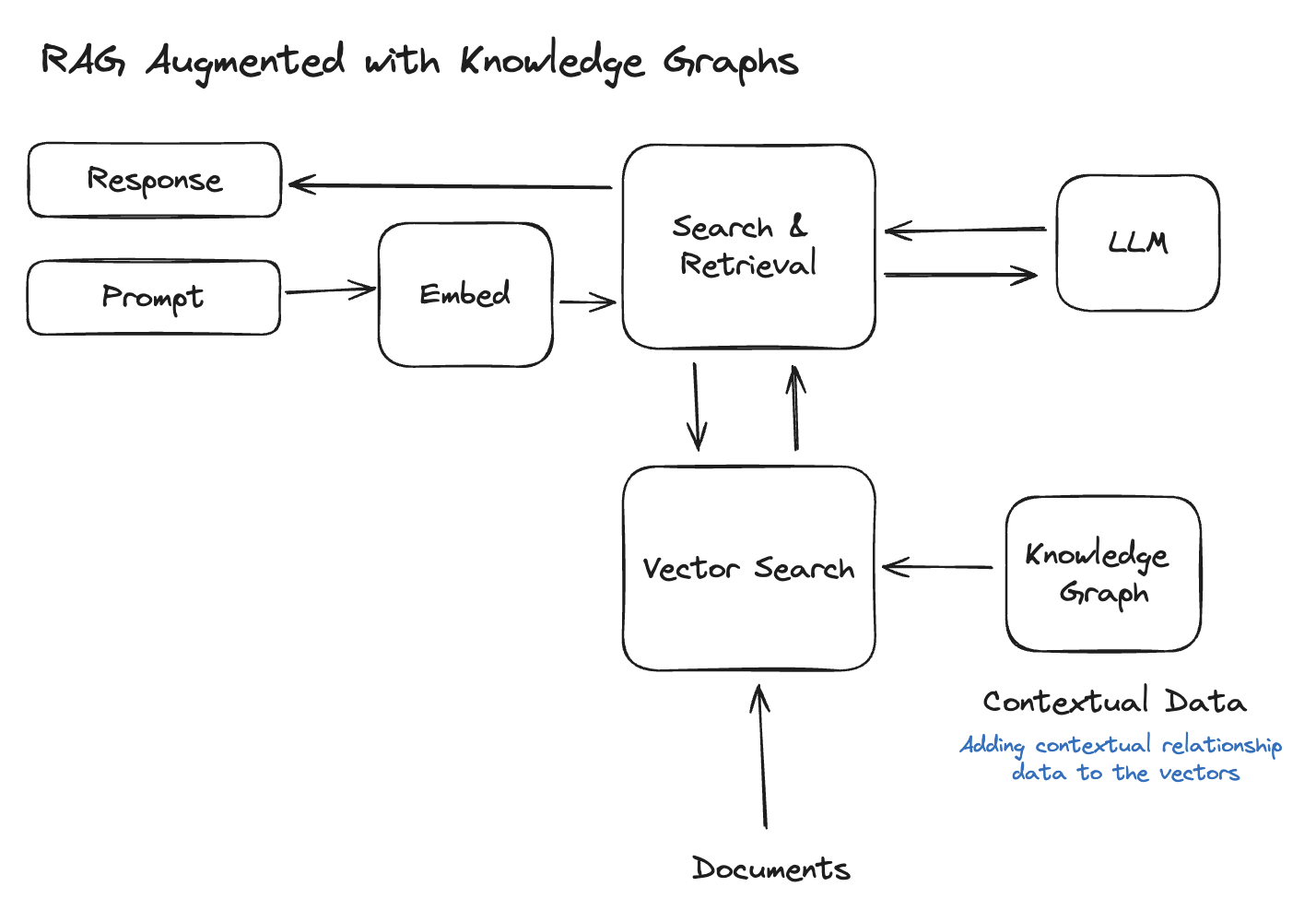

Knowledge Graphs (KGs), by encoding real-world entities and their connections, overcome the above deficiencies of pure vector search. KGs enable complex, multi-hop reasoning across diverse data sources, and thereby represent a more comprehensive understanding of the knowledge space.

Let's take a closer look at how we can combine vector embeddings and KGs, fusing surface-level semantics, structured knowledge, and logic to unlock new levels of reasoning, accuracy, and explanatory ability in LLMs.

We start by exploring the inherent weaknesses of relying on vector search in isolation, and then show how to combine KGs and embeddings complementarily, to overcome the limitations of each.

Most RAG systems employ vector search on a document collection to surface relevant context for the LLM. This process has several key steps:

This RAG Vector Search pipeline has several key limitations:

Because RAG focuses only on semantic similarity, it cannot reason across content, so it fails to really understand either the query or the data it retrieves. The more complex the query, the poorer RAG's results become.
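The similarity-only retrieval described above can be sketched in a few lines. This is a minimal illustration, not a production pipeline: `embed` is a hypothetical bag-of-words stand-in for a real embedding model, and the three documents are invented for the example.

```python
import math
from collections import Counter

def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector (assumption)."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, docs, k=2):
    """Score every document independently against the query and return the top k."""
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

# Hypothetical document collection
docs = [
    "Marie Curie won the Nobel Prize in Physics",
    "Pierre Curie was married to Marie Curie",
    "The Eiffel Tower is in Paris",
]
top = retrieve("Who was married to Marie Curie", docs, k=1)
print(top)
```

Each document is scored on its own, so nothing in this pipeline records that two retrieved passages mention the same entity or that one fact follows from another. That missing link structure is the gap the rest of the article addresses.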

Knowledge Graphs, on the other hand, represent information in an interconnected network of entities and relationships, enabling more complex reasoning across content.

How do KGs augment retrieval?

In sum, whereas RAG performs matching on disconnected nodes, KGs enable graph traversal search and retrieval of interconnected context. They search for query-relevant facts, make the ranking process transparent, and encode structured facts, relationships, and context to enable complex, precise, multi-step reasoning. As a result, compared to pure vector search, KGs can improve relevance and explanatory power.
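To make graph traversal retrieval concrete, here is a minimal sketch of a multi-hop lookup using breadth-first search. The triples, the `build_index` and `find_path` helpers, and the `max_hops` parameter are all hypothetical names chosen for illustration.

```python
from collections import deque

# Hypothetical toy triples: (subject, relation, object)
TRIPLES = [
    ("Marie Curie", "spouse", "Pierre Curie"),
    ("Marie Curie", "won", "Nobel Prize in Physics"),
    ("Pierre Curie", "born_in", "Paris"),
    ("Paris", "capital_of", "France"),
]

def build_index(triples):
    """Map each subject to its outgoing (relation, object) edges."""
    index = {}
    for s, r, o in triples:
        index.setdefault(s, []).append((r, o))
    return index

def find_path(index, start, goal, max_hops=3):
    """Breadth-first search; returns the shortest chain of triples from start to goal, or None."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        if len(path) == max_hops:
            continue  # do not expand beyond the hop budget
        for rel, nxt in index.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, path + [(node, rel, nxt)]))
    return None

index = build_index(TRIPLES)
path = find_path(index, "Marie Curie", "France")
for triple in path:
    print(triple)
```

The returned path doubles as an explanation: every hop is an explicit fact that can be shown to the user or passed to the LLM as evidence.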

But KG retrieval can be optimized further by applying certain constraints.

Knowledge Graphs represent entities and relationships that can be vector embedded to enable mathematical operations. These representations and retrieval results can be improved further by adding some simple but universal constraints:

In addition to improving interpretability, ensuring expected logic rules, permitting evidence-based rule confidence levels, and improving pattern learning, constraints can also:

In short, applying some simple constraints can augment Knowledge Graph embeddings to produce more optimized, explainable, and logically compliant representations, with inductive biases that mimic real-world structures and rules, resulting in more accurate and interpretable reasoning, without much additional complexity.
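As one hedged illustration of a constrained KG embedding, the sketch below uses a TransE-style distance (head + relation ≈ tail) and, after each gradient step, projects entity vectors back onto the unit sphere. The unit-norm constraint is an assumption chosen for the example and is not necessarily one of the constraints the article has in mind; the function names and the learning rate are likewise hypothetical.

```python
import math

def l2_normalize(v):
    """Project a vector onto the unit sphere (the constraint used in this sketch)."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n else v

def transe_distance(h, r, t):
    """TransE-style distance ||h + r - t||; lower means the triple is more plausible."""
    return math.sqrt(sum((hi + ri - ti) ** 2 for hi, ri, ti in zip(h, r, t)))

def constrained_step(h, r, t, lr=0.1):
    """One gradient step on the squared distance, then re-apply the norm constraint to entities."""
    diff = [hi + ri - ti for hi, ri, ti in zip(h, r, t)]
    h = [hi - lr * 2 * d for hi, d in zip(h, diff)]   # d/dh of ||diff||^2 is  2 * diff
    r = [ri - lr * 2 * d for ri, d in zip(r, diff)]   # d/dr of ||diff||^2 is  2 * diff
    t = [ti + lr * 2 * d for ti, d in zip(t, diff)]   # d/dt of ||diff||^2 is -2 * diff
    return l2_normalize(h), r, l2_normalize(t)

# Hypothetical 2-d embeddings for one true triple (head, relation, tail)
h, r, t = [1.0, 0.0], [0.0, 0.0], [0.0, 1.0]
before = transe_distance(h, r, t)
h2, r2, t2 = constrained_step(h, r, t)
after = transe_distance(h2, r2, t2)
print(before, after)
```

Keeping entity vectors at a fixed norm stops the model from lowering the loss simply by shrinking or inflating vectors, which is the kind of inductive bias the paragraph above describes.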

Knowledge Graphs require reasoning to derive new facts, answer queries, and make predictions. But there is a diverse range of reasoning techniques, whose respective strengths can be combined to fit the requirements of specific use cases.

| Reasoning framework | Method | Pros | Cons |
|---|---|---|---|
| Logical Rules | Express knowledge as logical axioms and ontologies | Sound and complete reasoning through theorem proving | Limited uncertainty handling |
| Graph Embeddings | Embed KG structure for vector space operations | Handle uncertainty | Lack expressivity |
| Neural Provers | Differentiable theorem proving modules combined with vector lookups | Adaptive | Opaque reasoning |
| Rule Learners | Induce rules by statistical analysis of graph structure and data | Automate rule creation | Uncertain quality |
| Hybrid Pipeline | Logical rules encode unambiguous constraints; embeddings provide vector space operations; neural provers fuse the benefits through joint training | | |
| Explainable Modeling | Use case-based, fuzzy, or probabilistic logic to add transparency | Can express degrees of uncertainty and confidence in rules | |
| Iterative Enrichment | Expand knowledge by materializing inferred facts and learned rules back into the graph | Provides a feedback loop | |

The key to creating a suitable pipeline is identifying the types of reasoning required and mapping them to the right combination of appropriate techniques.
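The "Logical Rules" and "Iterative Enrichment" rows can be illustrated together with naive forward chaining: apply rules to the graph, write the inferred facts back, and repeat until nothing new appears. This is a minimal sketch; the `materialize` helper, the transitivity rule, and the example facts are hypothetical.

```python
def materialize(facts, rules, max_rounds=10):
    """Apply each rule repeatedly, adding inferred facts until a fixed point is reached."""
    facts = set(facts)
    for _ in range(max_rounds):
        new = set()
        for rule in rules:
            new |= rule(facts) - facts
        if not new:
            break  # fixed point: no rule produced anything new
        facts |= new
    return facts

def located_in_transitive(facts):
    """Rule: (a located_in b) and (b located_in c) implies (a located_in c)."""
    located = [(s, o) for s, r, o in facts if r == "located_in"]
    return {(a, "located_in", c) for a, b in located for b2, c in located if b == b2}

# Hypothetical starting facts
facts = {
    ("Louvre", "located_in", "Paris"),
    ("Paris", "located_in", "France"),
    ("France", "located_in", "Europe"),
}
closed = materialize(facts, [located_in_transitive])
print(sorted(closed))
```

The second round of the loop derives that the Louvre is in Europe from a fact that was itself inferred in the first round, which is the feedback loop the table refers to.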

Retrieving Knowledge Graph facts for the LLM introduces information bottlenecks. Careful design can mitigate these bottlenecks by ensuring relevance. Here are some methods for doing that:

To preserve a high-quality flow of information to the LLM, you need to strike a balance between granularity and cohesiveness. KG relationships help contextualize otherwise isolated facts. Techniques that optimize the relevance, structure, explicitness, and context of retrieved knowledge help maximize the LLM's reasoning ability.
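One simple way to picture this trade-off is a function that ranks candidate triples by relevance to the query, keeps only a small budget of them, and renders them as explicit statements for the prompt. The sketch below assumes entity linking has already identified the query entities; `facts_to_context`, `max_facts`, and the example data are hypothetical.

```python
def facts_to_context(triples, query_entities, max_facts=3):
    """Rank triples by how many query entities they mention, then render the top ones as text."""
    def relevance(triple):
        s, _, o = triple
        return (s in query_entities) + (o in query_entities)

    ranked = sorted(triples, key=relevance, reverse=True)  # stable: ties keep input order
    kept = [t for t in ranked[:max_facts] if relevance(t) > 0]
    return "\n".join(f"- {s} {r.replace('_', ' ')} {o}" for s, r, o in kept)

# Hypothetical candidate facts and linked query entities
triples = [
    ("Paris", "capital_of", "France"),
    ("Marie Curie", "born_in", "Warsaw"),
    ("Marie Curie", "spouse", "Pierre Curie"),
    ("Pierre Curie", "born_in", "Paris"),
]
context = facts_to_context(triples, {"Marie Curie", "Pierre Curie"}, max_facts=2)
print(context)
```

A small `max_facts` keeps the context focused at the cost of dropping connecting facts; a larger budget preserves more of the surrounding relationships but dilutes relevance.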

Knowledge Graphs provide structured representations of entities and relationships. KGs empower complex reasoning through graph traversals, and handle multi-hop inferences. Embeddings encode information in the vector space for similarity-based operations. Embeddings enable efficient approximate search at scale, and surface latent patterns.

Combining KGs and embeddings permits their respective strengths to overcome each other’s weaknesses, and improve reasoning capabilities, in the following ways:

While KGs enable structured knowledge representation and reasoning, embeddings provide the pattern recognition capability and scalability of neural networks, augmenting reasoning capabilities in the kinds of language AI that require both statistical learning and symbolic logic.
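A minimal sketch of one way to blend the two signals: score each candidate by a weighted sum of embedding similarity and graph proximity to an anchor entity. The weighting scheme, the `alpha` parameter, the `1 / (1 + hops)` proximity measure, and the toy vectors are all assumptions made for illustration.

```python
import math
from collections import deque

def cosine(a, b):
    """Cosine similarity between two dense vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def hops(graph, start, goal):
    """Shortest hop count between two nodes in an adjacency dict, or None if unreachable."""
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        node, dist = queue.popleft()
        if node == goal:
            return dist
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None

def hybrid_score(query_vec, cand_vec, graph, anchor, cand, alpha=0.5):
    """Weighted blend of semantic similarity and graph proximity (closer nodes score higher)."""
    dist = hops(graph, anchor, cand)
    proximity = 0.0 if dist is None else 1.0 / (1 + dist)
    return alpha * cosine(query_vec, cand_vec) + (1 - alpha) * proximity

# Hypothetical graph and embeddings: both candidates look equally similar to the query vector
graph = {
    "Marie Curie": ["Pierre Curie"],
    "Pierre Curie": ["Marie Curie", "Paris"],
    "Paris": ["Pierre Curie"],
}
query_vec = [1.0, 0.0]
paris = hybrid_score(query_vec, [0.6, 0.8], graph, "Marie Curie", "Paris")
berlin = hybrid_score(query_vec, [0.6, 0.8], graph, "Marie Curie", "Berlin")
print(paris, berlin)
```

Here the embeddings alone cannot separate the two candidates, while the graph term favors the one that is actually connected to the anchor entity.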

You can use collaborative filtering's ability to leverage connections between entities to enhance search, by taking the following steps:
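As a hedged illustration of the idea, the sketch below treats "user likes item" edges as a bipartite graph and recommends items reached through users with overlapping interests. The `recommend` function, the overlap-count scoring, and the example data are hypothetical, and a real system would likely use a weighted or embedding-based similarity instead.

```python
from collections import Counter

def recommend(likes, user, k=2):
    """Score unseen items by the overlap between the user and each neighbor who likes them."""
    mine = likes[user]
    scores = Counter()
    for other, theirs in likes.items():
        if other == user:
            continue
        overlap = len(mine & theirs)
        if overlap == 0:
            continue  # no shared entities, so this neighbor carries no signal
        for item in theirs - mine:
            scores[item] += overlap
    # Highest score first; ties broken alphabetically for a deterministic result
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, _ in ranked[:k]]

# Hypothetical user -> liked-topics edges
likes = {
    "ana": {"graph_db", "embeddings"},
    "ben": {"graph_db", "embeddings", "rag"},
    "cat": {"graph_db", "sparql"},
    "dan": {"cooking"},
}
suggestions = recommend(likes, "ana")
print(suggestions)
```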

Knowledge Graphs unlock new reasoning capabilities for language models by providing structured real-world knowledge. But KGs aren't perfect. They contain knowledge gaps, and have to be updated to remain current. Flywheel Learning can help remediate these problems, improving KG quality by continuously analyzing system interactions and ingesting new data.

Building an effective KG flywheel requires:

Each iteration through the loop further enhances the Knowledge Graph.

Flywheels can also handle high-volume ingestion of streamed live data.

Streaming data pipelines, while continuously updating the KG, will not necessarily fill all knowledge gaps. To handle these, flywheel learning also:

Each loop of the flywheel analyzes current usage patterns and remediates more data issues, incrementally improving the quality of the Knowledge Graph. The flywheel process thus enables the KG and language model to co-evolve and improve in accordance with feedback from real-world system operation. Flywheel learning provides a scaffolding for continuous, automated improvement of the Knowledge Graph, tailoring it to fit the language model's needs. This powers the accuracy, relevance, and adaptability of the language model.
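The loop described above can be sketched as a small class that answers lookups, records the ones the graph cannot answer, surfaces the most frequent gaps, and ingests new facts to close them. This is a minimal sketch under stated assumptions: the `Flywheel` class, its method names, and the example facts are hypothetical, and where the new facts come from (curation, extraction, streaming) is left outside the example.

```python
from collections import Counter

class Flywheel:
    """Toy feedback loop: answer queries, log misses, and ingest facts that close the gaps."""

    def __init__(self, facts):
        self.facts = set(facts)
        self.misses = Counter()  # (subject, relation) -> number of unanswered queries

    def answer(self, subject, relation):
        """Return matching objects; record a miss when the graph has no answer."""
        hits = sorted(o for s, r, o in self.facts if s == subject and r == relation)
        if not hits:
            self.misses[(subject, relation)] += 1
        return hits

    def top_gaps(self, n=1):
        """Most frequently missed lookups, i.e. where new data would help most."""
        return [gap for gap, _ in self.misses.most_common(n)]

    def ingest(self, new_facts):
        """Add new facts and clear the corresponding gaps."""
        for fact in new_facts:
            s, r, _ = fact
            self.facts.add(fact)
            self.misses.pop((s, r), None)

# Hypothetical usage: two iterations of the loop
fw = Flywheel({("Paris", "capital_of", "France")})
fw.answer("Berlin", "capital_of")
fw.answer("Berlin", "capital_of")
fw.answer("Rome", "capital_of")
gaps = fw.top_gaps()
fw.ingest([("Berlin", "capital_of", "Germany")])
result = fw.answer("Berlin", "capital_of")
print(gaps, result)
```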

In sum, to achieve human-level performance, language AI must be augmented by retrieving external knowledge and reasoning. Where LLMs and RAG struggle with representing the context and relationships between real-world entities, Knowledge Graphs excel. The Knowledge Graph's structured representations permit complex, multi-hop, logical reasoning over interconnected facts.

Still, while KGs provide previously missing information to language models, KGs can't surface latent patterns the way that language models working on vector embeddings can. Together, KGs and embeddings provide a highly productive blend of knowledge representation, logical reasoning, and statistical learning. And embedding of KGs can be optimized by applying some simple constraints.

Finally, KGs aren't perfect; they have knowledge gaps and need updating. Flywheel Learning can make up for KG knowledge gaps through live system analysis, and handle continuous, large-volume data updates to keep the KG current. Flywheel learning thus enables the co-evolution of KGs and LLMs to achieve better reasoning, accuracy, and relevance in language AI applications.

The partnership of KGs and embeddings provides the building blocks for moving language AI toward true comprehension: conversation agents that understand context and history, recommendation engines that discern subtle preferences, and search systems that synthesize accurate answers by connecting facts. As we continue to improve our solutions to the challenges of constructing high-quality Knowledge Graphs, benchmarking, noise handling, and more, a key role will no doubt be played by hybrid techniques combining symbolic and neural approaches.

The author and editor have adapted this article with extensive content and format revisions from the author's previous article Embeddings + Knowledge Graphs, published in Towards Data Science, Nov 14, 2023.
