Not sure which vector DB fits your architecture? Get a free 15-min technical consultation → let's chat

Choosing a vector database for large-scale AI or search applications is less about comparing feature checklists and more about understanding your system’s architecture and constraints. The goal is to pick a solution that aligns with your use case’s scale, performance needs, and operational limits.

If you’re exploring what might work best for your use case or want to discuss different architecture choices, you can book a short technical chat using this link.

| Dimension | Key Considerations | Trade-Offs / Recommendations |

|---|---|---|

| Prototype → Production | - In-process vs. standalone deployment - Ephemeral vs. durable storage | - Use embedded/in-memory DBs for prototyping; migrate to managed or self-hosted clusters for production. - Ephemeral (fast but volatile) vs. durable (persistent, reliable). |

| Workload Type | - Write-heavy vs. read-heavy access patterns - Hybrid workloads | - Write-heavy: need async indexing, buffering, or real-time insert support. - Read-heavy: pre-built indexes (HNSW, IVF) offer speed at higher memory cost. - Hybrid: mix mutable "fresh" and static "main" indexes. |

| Memory vs. Disk | - In-memory vs. disk-backed indexing - Sharding and scaling - Metadata and payload size | - In-memory = fastest but costly and limited. - Disk-based = larger scale, slower but persistent. - Hybrid (memory + disk) balances both. - Store only embeddings in vector DB; offload large documents elsewhere. |

| Deployment Model | - Fully managed service vs. self-hosted - In-process vs. standalone server | - Managed: minimal ops, faster deployment, higher cost & less control. - Self-hosted: full control, cheaper at scale, higher ops burden. - Start embedded → move to networked as system scales. |

| Tenant Model | - Single-tenant vs. multi-tenant architecture | - Single-tenant: simpler, faster. - Multi-tenant: cost-efficient but adds isolation and scaling complexity. - Use namespaces/collections for isolation if needed. |

| Query Features | - Metadata filters - Hybrid (dense + sparse) search - Multi-vector or mixture-of-encoders - Specialized queries (geo, facets) | - Strong filtering support is critical for scalability. - Hybrid search merges semantics + keywords. - Multi-vector support or mixture-of-encoders simplifies multi-modal search. - Few DBs support geo/faceted search natively. |

| Indexing Strategy | - ANN vs. brute-force - HNSW, IVF, PQ, LSH variants - Index rebuild costs | - ANN offers massive speed-up with small recall trade-off. - Brute-force only for small datasets or accuracy-critical cases. - Evaluate on latency-recall curve, not index name. - Index rebuilds can be expensive - plan for them. |

| Operational Costs | - Expensive ops: index rebuilds, bulk inserts, unindexed filters, strict consistency - Cheap ops: flat inserts, ANN queries, buffered writes, lazy deletes | - Avoid frequent rebuilds and unindexed filters. - Use async writes and lazy deletes. - ANN queries are efficient; design updates to be batched. |

| Decision Factors | - Scale & latency goals - Operational capacity - Required query features - Acceptable trade-offs | - Focus on fit to architecture and constraints, not feature lists. - No universal "best" DB - choose based on workload, ops tolerance, and cost. |

Planning a migration from prototype to production?

Get a quick architecture review → Book here

When starting out, you might use an in-process or embedded vector store for quick prototyping. An in-process library (running inside your application) is simple to set up and offers full control over your data in development. This works well for single-user scenarios or small datasets, where you can load vectors into memory and iterate rapidly. However, as you move to production scale, the requirements change:

Deployment Model: Production deployments often demand a standalone or distributed database service rather than an embedded library. Fully managed services handle infrastructure scaling, replication, and maintenance for you, which accelerates deployment and reduces DevOps burden. You focus on using the database, not running it. In contrast, self-hosting gives complete control (important for data privacy or custom tuning) but means you manage servers, updates, and scaling yourself. The trade-off comes down to ease against control.

Ephemeral vs. Durable: During prototyping you might tolerate an ephemeral in-memory index. This can be faster since it avoids disk I/O, but it’s not suitable for production where persistence and recovery are required. For large-scale applications, ensure the vector DB supports disk-backed storage so indexes and data persist across restarts.

In summary, use lightweight embedded solutions for early development. As you scale, plan for a robust deployment: either a self-managed cluster tuned for your needs or a managed cloud service that offloads maintenance.

Unsure how to structure mutable vs. static indexes?

Get a free technical consult → Book here

Consider your application’s access patterns. Is it ingesting vectors constantly (high write throughput), or mainly querying existing vectors (read-heavy)? Different vector database architectures handle writes and reads differently:

High Write Throughput: If you need to index new vectors continuously, look for systems optimized for fast inserts and updates. Some indexes can handle incremental additions but with caveats (e.g. HNSW insert performance degradation over time). Many databases separate write/read paths by buffering new vectors and merging later.

Read-Heavy Patterns: If your dataset is mostly static, you can use heavier, more advanced index structures to accelerate reads. This adds memory overhead but delivers very low-latency queries at scale.

Balancing Both: Many real-time applications use a hybrid: a mutable index for recent data and a static ANN index for older data. Queries hit both, ensuring freshness + performance.

In short, match indexing strategy to your workload profile to avoid unnecessary latency or reindexing overhead.

Another fundamental consideration is whether your vector index can reside fully in memory or must be disk-backed:

In-Memory Indexes: Deliver the fastest latencies but are limited by RAM scale and cost. They may require heavy sharding for very large datasets.

Disk-Based Indexes: Scale beyond RAM limits and provide persistence, though with higher latency. Modern approaches (DiskANN, SPANN) optimize disk access for speed.

Hybrid Models: Many databases mix both keeping coarse structures in RAM while storing vectors on disk.

Payload Size: Large metadata or documents stored inside the vector DB increase memory/disk footprint. Often it's better to store embeddings in the DB and content elsewhere.

Choose based on your data scale and memory constraints to avoid bottlenecks or unnecessary complexity.

Operational considerations can be as important as raw performance. Vector databases come in both fully-managed cloud services and self-hosted software packages and even in-between (like enterprise appliances or cloud-managed open source). Your choice will affect development speed, cost structure, and maintenance work:

Fully Managed Services: Cloud-hosted vector DB offerings where the provider runs the infrastructure. They handle scaling, replication, upgrades, and often provide high-level APIs. Ideal when you want to integrate semantic search quickly without building ML ops expertise. The trade-off is less customization and potentially higher long-term cost.

Self-Hosted (Open Source or Enterprise): Running your own vector database (or using an open-source library) gives maximum flexibility and control, at the cost of operational complexity and DevOps investment.

In-Process Libraries vs. Standalone Servers: Embedded libraries are great for prototyping or single-application usage with no network overhead. Standalone services are better for multi-client architectures or horizontal scaling.

Ultimately, decide based on your priorities and resources. If speed to market and minimal ops are paramount, lean towards a managed service. If customizability, cost control at scale, or data sovereignty are top concerns, be prepared to self-host and invest in the necessary infrastructure work.

Consider whether your application serves one dataset or many isolated datasets (tenants). Multi-tenancy is common in SaaS platforms: a single system must handle vectors for multiple clients or domains, keeping their data separate. Your choice of vector DB should align with how you plan to isolate and organize data:

Single-Tenant (Simplicity): One large corpus or a few related ones, in one or a few indexes. Straightforward to manage and typically higher per-query performance.

Multi-Tenant Support: A single cluster serving many isolated datasets. Enables cost sharing but adds complexity around isolation, quotas, indexing strategies, and scaling.

In summary, design for multi-tenancy only if you need to. A vector DB that shines for one big dataset may not handle thousands of tiny ones efficiently, and vice versa.

Not all vector databases offer the same query capabilities beyond basic nearest neighbor search. Consider what search functionality your application requires:

Metadata Filters: Essential for combining similarity with constraints like category, time, or price. Strong pre-filtering support is critical for performance at scale.

Hybrid Search (Dense + Sparse): Combining semantic similarity with keyword or sparse scoring is important when some terms must match exactly.

Multi-Vector per Document or Mixture-of-Encoders: Multi-vector support helps for multi-modal content; mixture-of-encoders can also embed multiple signals into a single vector.

Geospatial and Other Specialized Queries: Check for native support if you need geo-distance, faceting, or analytical-style queries.

List the query types you expect and confirm the DB can support them efficiently.

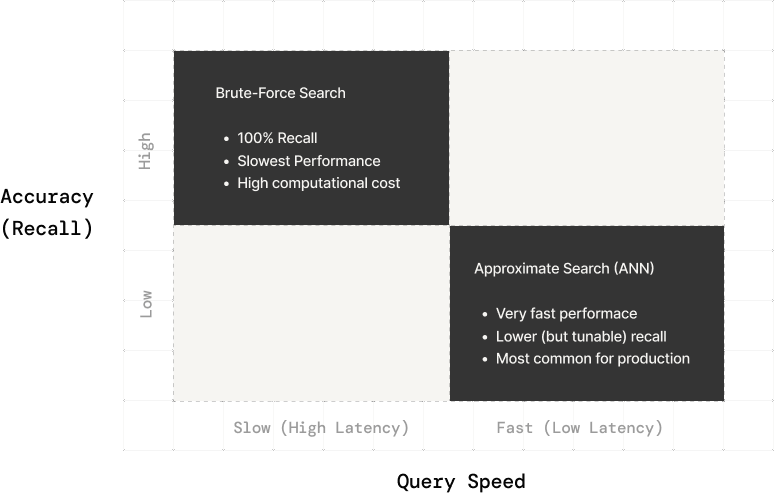

At the heart of any vector database is the index type it uses for nearest neighbor search. You’ll encounter terms like brute-force (flat) search, HNSW graphs, IVF, PQ, LSH, and others. Rather than focusing on names, focus on how index choice affects query latency and recall:

Brute-Force vs. Approximate: Brute-force delivers exact results but does not scale. ANN trades a bit of accuracy for orders-of-magnitude faster queries and is standard at scale.

Index Types: Different structures (HNSW, IVF, PQ, DiskANN, etc.) have different strengths but all live on a latency/recall trade-off curve. The important question is whether the DB can hit your targets on that curve.

Guaranteed Recall: For cases demanding 100 percent recall, a common pattern is ANN for candidates plus exact reranking on a smaller subset.

Build and Maintenance Costs: Some indexes build slowly or handle deletions poorly, which affects how often you can re-embed or restructure data.

Do not choose a vector DB just because it advertises a specific index type. Choose based on its ability to meet your latency and recall goals at your scale.

Finally, it’s useful to know which operations or features will cost the most in terms of performance or complexity:

Knowing this helps you design a system that leans on cheap operations in the hot path and schedules expensive ones carefully.

Selecting a vector database is a strategic decision that hinges on your specific needs and constraints. Instead of asking “which product has the most features?”, ask how each option fits your scenario in terms of architecture:

By focusing on these architectural and practical considerations, you move beyond marketing checklists and choose a vector database that will serve your application well in the long run. No single option is the best in all scenarios; the best vector database is the one that fits your constraints and makes your engineers’ lives easier while delivering the performance your application and users demand.

Need help evaluating vector DB architectures for your use case?

Get a technical review from Superlinked → Book here

Stay updated with VectorHub

Continue Reading